In this guide, we are going to walk through how to build an image retrieval system using k-nearest neighbours (KNN) search in Elasticsearch and CLIP embeddings computed with Roboflow Inference, a computer vision inference server.

Roboflow Universe, the largest repository of computer vision data on the web with more than 100 million images hosted, uses CLIP embeddings to enable efficient, semantic queries for our dataset search engine.

Without further ado, let’s get started!

Introduction to CLIP and Roboflow Inference

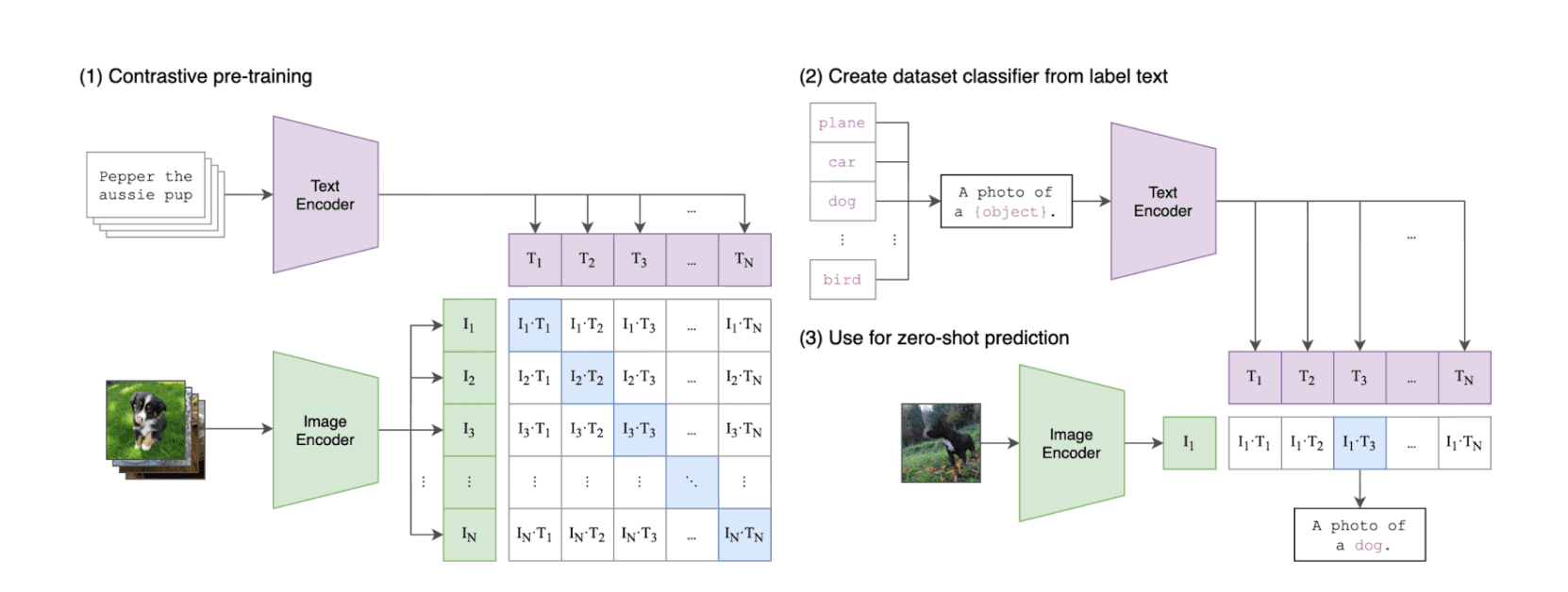

CLIP (Contrastive Language-Image Pretraining) is a computer vision model architecture, and a model trained with it, developed by OpenAI. The model was released in 2021 under an MIT license.

The model was trained “to predict the most relevant text snippet, given an image”. In doing so, CLIP learned to map images and text into a shared vector space in which related images and captions have similar vectors. Because both kinds of input land in the same space, you can compare their vectors to find images similar to a text query, or images similar to another image.

The advancement of multimodal models like CLIP has made it easier than ever to build a semantic image search engine.

Models like CLIP can be used to create “embeddings” that capture semantic information about an image or a text query. Vector embeddings are numerical representations of words, sentences, images, and other data that capture their meaning and relationships.

Roboflow Inference is a high-performance computer vision inference server. Roboflow Inference supports a wide range of state-of-the-art vision models, from YOLO11 for object detection to PaliGemma for visual question answering to CLIP for multimodal embeddings.

You can use Roboflow Inference with a Python SDK, or in a Docker environment.

In this guide, we will use Inference to calculate CLIP embeddings, then store them in an Elasticsearch cluster for use in building an image retrieval system.

Prerequisites

To follow this guide, you will need:

- An Elasticsearch instance that supports KNN search

- A free Roboflow account

- Python 3.12+

We have prepared a Jupyter Notebook that you can run on your computer or on Google Colab for use in following along with this guide. Open the notebook.

Step #1: Set up an Elasticsearch index with KNN support

For this guide, we will use the Elasticsearch Python SDK. You can install it with pip: `pip install elasticsearch`.

If you don’t already have an Elasticsearch cluster set up, refer to the Elasticsearch documentation to get started.

Once you have installed the SDK and set up your cluster, create a new Python file and add the following code to connect a client to your cluster:
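A minimal connection sketch follows. It assumes your cluster URL and API key are stored in environment variables named `ES_URL` and `ES_API_KEY` (hypothetical names chosen for this example) and falls back to `http://localhost:9200` for a local, unsecured cluster. Adapt the settings to however your deployment authenticates.

```python
import os


def connection_settings(env=None) -> dict:
    """Build keyword arguments for the Elasticsearch client.

    ES_URL and ES_API_KEY are hypothetical variable names used for this sketch.
    """
    env = os.environ if env is None else env
    settings = {"hosts": env.get("ES_URL", "http://localhost:9200")}
    api_key = env.get("ES_API_KEY")
    if api_key:
        # Only pass an API key when one is configured (e.g. Elastic Cloud).
        settings["api_key"] = api_key
    return settings


def make_client():
    # Imported here so connection_settings() can be used without the SDK.
    from elasticsearch import Elasticsearch

    return Elasticsearch(**connection_settings())


# Usage (requires a reachable cluster):
#   client = make_client()
#   print(client.info()["version"]["number"])  # fails fast on a bad connection
```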

To run embedding searches in Elasticsearch, we need an index mapping that contains a dense_vector property type. For this guide, we will create an index with two fields: a dense vector that contains the CLIP embedding associated with an image, and a file name associated with an image.

Run the following code to create your index:
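Here is one way to write that index creation, as a sketch. The index name `images` and the field names `embedding` and `filename` are hypothetical choices for this example; the `dense_vector` field sets `dims` to 512 and `similarity` to `cosine`, matching the CLIP model used in this guide.

```python
INDEX_NAME = "images"  # hypothetical index name used throughout this sketch
CLIP_DIMS = 512  # must match the length of the CLIP vectors you index


def image_index_mappings(dims: int = CLIP_DIMS) -> dict:
    """Mappings with a cosine-similarity dense vector and a file name field."""
    return {
        "properties": {
            "embedding": {
                "type": "dense_vector",
                "dims": dims,
                "index": True,  # index the vectors so approximate kNN search can use them
                "similarity": "cosine",  # set explicitly rather than rely on a default
            },
            "filename": {"type": "keyword"},
        }
    }


def create_image_index(client, index_name: str = INDEX_NAME):
    """Create the index; `client` is an elasticsearch.Elasticsearch instance."""
    return client.indices.create(index=index_name, mappings=image_index_mappings())


# Usage, with a connected `client`:
#   print(create_image_index(client))
```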

The response should include `acknowledged: true`, confirming that the index was created.

Rather than rely on a default similarity metric, which depends on your Elasticsearch version and vector settings, we explicitly set the vector field's similarity to cosine when creating the index. L2 norm (Euclidean distance) is affected by the length of the vectors as well as their direction, while cosine similarity measures only the angle between them. CLIP embeddings are best compared with cosine similarity.
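To see why the metric matters, the pure-Python sketch below compares two vectors that point in the same direction but differ in length: cosine similarity treats them as identical, while Euclidean (L2) distance does not. It also applies the `(1 + cosine) / 2` rescaling that Elasticsearch documents for `_score` on cosine fields, so scores fall between 0 and 1. The helper names are illustrative only.

```python
import math


def cosine_similarity(a, b):
    """Dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def l2_distance(a, b):
    """Straight-line (Euclidean) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def es_cosine_score(a, b):
    # Elasticsearch maps cosine similarity in [-1, 1] to a score in [0, 1].
    return (1 + cosine_similarity(a, b)) / 2


short = [1.0, 2.0, 2.0]
doubled = [2.0, 4.0, 4.0]  # same direction, twice the length
print(cosine_similarity(short, doubled))  # 1.0: identical direction
print(l2_distance(short, doubled))  # 3.0: far apart by L2 distance
```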

For this guide, we will use a CLIP model that returns 512-dimensional vectors. If you use a different CLIP model, make sure that you set the dims value to the length of the vectors that model returns.

Step #2: Install Roboflow Inference

Next, we need to install Roboflow Inference and supervision, a tool for working with vision model predictions. You can install the required dependencies using the following command:

This will install both Roboflow Inference and the CLIP model extension that we will use to compute vectors.

With Roboflow Inference installed, we can start to compute and store CLIP embeddings.

Step #3: Compute and store CLIP embeddings

For this guide, we are going to build a semantic search engine for the COCO 128 dataset. This dataset contains 128 images sampled from the larger Microsoft COCO dataset. The images in COCO 128 are varied, making it an ideal dataset for use in testing our semantic search engine.

To download COCO 128, first create a free Roboflow account. Then, navigate to the COCO 128 dataset page on Roboflow Universe, Roboflow’s open computer vision dataset community.

Click “Download Dataset”:

Choose the “YOLOv8” format. Choose the option to show a download code:

Copy the terminal command to download the dataset. The command should look something like this:

When you run the command, the dataset will be downloaded to your computer and unzipped.

We can now start computing CLIP embeddings.

Add the following code to your Python file from earlier, then run the full file:

This code will loop through all images in the train split of the COCO 128 dataset and run them through CLIP with Roboflow Inference. We then index the vectors in Elasticsearch alongside the file names related to each vector.
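The loop described above can be sketched as follows. It assumes Inference's `Clip` class (imported from `inference.models`) and its `embed_image` method, a hypothetical index named `images` with `embedding` and `filename` fields, and a placeholder `IMAGE_DIR` that you should point at the unzipped train images. Depending on your Inference version, you may also need a `ROBOFLOW_API_KEY` environment variable before the CLIP weights download. Two choices here are ours rather than the original script's: the file name doubles as the document `id`, so re-running overwrites documents instead of duplicating them, and the index is refreshed at the end so the vectors are searchable right away.

```python
import os

INDEX_NAME = "images"  # hypothetical; use the index you created earlier
IMAGE_DIR = "path/to/coco-128/train/images"  # placeholder: your unzipped train split
CLIP_DIMS = 512
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def as_vector(embedding) -> list:
    """Convert a CLIP embedding (NumPy array or list) to a flat list of floats."""
    if hasattr(embedding, "tolist"):
        embedding = embedding.tolist()  # NumPy array -> nested Python lists
    if embedding and isinstance(embedding[0], list):
        embedding = embedding[0]  # unwrap a batch of one, shape (1, dims)
    return [float(x) for x in embedding]


def build_document(filename: str, embedding, dims: int = CLIP_DIMS) -> dict:
    """Build the document body, rejecting vectors that don't match `dims`."""
    vector = as_vector(embedding)
    if len(vector) != dims:
        raise ValueError(f"expected {dims} dimensions, got {len(vector)}")
    return {"filename": filename, "embedding": vector}


def index_images(client, image_dir: str = IMAGE_DIR, index_name: str = INDEX_NAME) -> int:
    """Embed every image in `image_dir` with CLIP and index it; returns the count."""
    from inference.models import Clip  # assumes Inference with CLIP support

    clip = Clip()  # downloads and caches the CLIP weights on first use
    count = 0
    for name in sorted(os.listdir(image_dir)):
        if not name.lower().endswith(IMAGE_EXTENSIONS):
            continue
        # Assumes embed_image accepts a file path.
        embedding = clip.embed_image(os.path.join(image_dir, name))
        # Using the file name as the id makes re-runs overwrite, not duplicate.
        client.index(index=index_name, id=name, document=build_document(name, embedding))
        count += 1
    client.indices.refresh(index=index_name)  # make new documents searchable now
    return count


# Usage, with a connected `client`:
#   print(index_images(client), "images indexed")
```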

It may take 1-2 minutes for the CLIP model weights to download. Your script will pause temporarily while this is done. The CLIP model weights are then cached on your system for future use.

Note: When you run the code above, you may see a few warnings related to ExecutionProviders. These relate to the optimizations Inference can use on different devices. For example, if you deploy on a CUDA device, the CoreMLExecutionProvider will not be available, so a warning is raised. No action is required when you see these warnings.

Step #4: Retrieve data from Elasticsearch

Once you have indexed your data, you are ready to run a test query!

To use text as an input, you can use this code to compute a query vector for the search:
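A minimal sketch, assuming Inference's `Clip` class exposes an `embed_text` method; `text_query_vector` and `as_vector` are hypothetical helper names. The result is flattened to a plain list of floats so it can be sent to Elasticsearch as a query vector.

```python
def as_vector(embedding) -> list:
    """Flatten a CLIP embedding (NumPy array or nested list) to a list of floats."""
    if hasattr(embedding, "tolist"):
        embedding = embedding.tolist()  # NumPy array -> nested Python lists
    if embedding and isinstance(embedding[0], list):
        embedding = embedding[0]  # unwrap a batch of one, shape (1, dims)
    return [float(x) for x in embedding]


def text_query_vector(clip, query: str) -> list:
    """Embed a text query; `clip` is assumed to be an inference.models.Clip."""
    return as_vector(clip.embed_text(query))


# Usage (assumes Roboflow Inference with CLIP support is installed):
#   from inference.models import Clip
#   clip = Clip()
#   query_vector = text_query_vector(clip, "coffee")
```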

To use an image as an input, you can use this code:
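The image version is the same idea with `embed_image`, shown here as a sketch. It assumes `Clip.embed_image` accepts a file path (or an already loaded image); `image_query_vector` is a hypothetical helper name.

```python
def image_query_vector(clip, image) -> list:
    """Embed a query image (file path or loaded image) with CLIP.

    `clip` is assumed to be an inference.models.Clip instance.
    """
    embedding = clip.embed_image(image)
    if hasattr(embedding, "tolist"):
        embedding = embedding.tolist()  # NumPy array -> nested Python lists
    if embedding and isinstance(embedding[0], list):
        embedding = embedding[0]  # unwrap a batch of one, shape (1, dims)
    return [float(x) for x in embedding]


# Usage (the path is a placeholder):
#   from inference.models import Clip
#   clip = Clip()
#   query_vector = image_query_vector(clip, "path/to/query.jpg")
```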

For this guide, let’s run a text search with the query “coffee”.

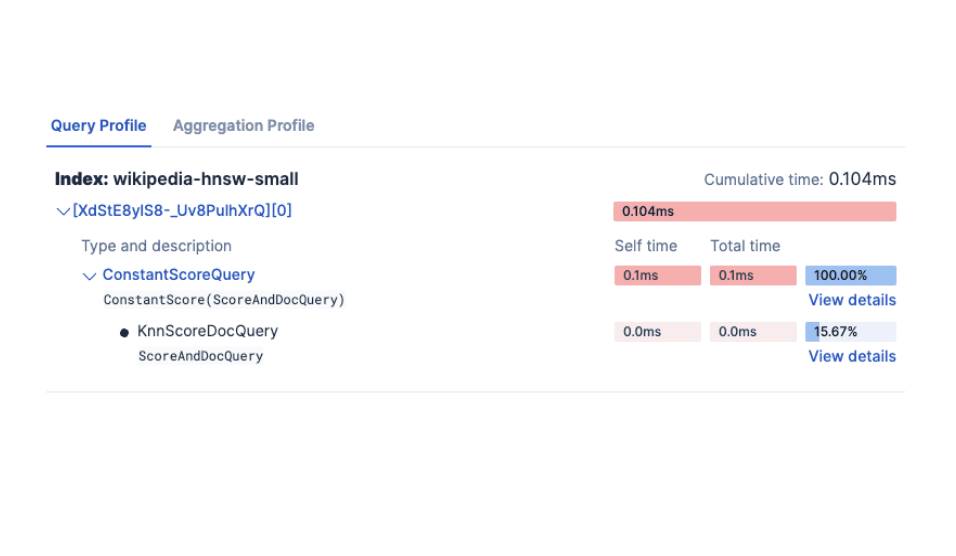

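We are going to use a k-nearest neighbours (KNN) search. This search type accepts an input embedding and finds the documents in our index whose embeddings are most similar to it. In Elasticsearch, KNN search on an indexed dense_vector field is approximate, which keeps it fast on large indexes. KNN search is commonly used for vector comparisons.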

KNN search always returns the top k nearest neighbours. If k = 3, Elasticsearch will return the three most similar documents to the input vector.
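To make "top k nearest neighbours" concrete, here is an exact, brute-force KNN in plain Python: score every stored vector against the query with cosine similarity and keep the k best. This only illustrates the concept. Elasticsearch does not scan every vector like this for its approximate KNN search, and the toy file names and 2-D vectors below are made up for the example.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def knn(query, items, k=3):
    """Return the k (score, name) pairs most similar to `query`, best first."""
    scored = ((cosine(query, vec), name) for name, vec in items.items())
    return heapq.nlargest(k, scored)


toy_index = {
    "coffee_cup.jpg": [0.9, 0.1],
    "latte.jpg": [0.8, 0.3],
    "dog.jpg": [0.1, 0.9],
    "car.jpg": [-0.7, 0.2],
}
for score, name in knn([1.0, 0.0], toy_index, k=3):
    print(f"{score:.3f} {name}")
```

With k = 3, the least similar vector (`car.jpg`) is dropped and the other three come back in order of similarity.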

With Elasticsearch, you can retrieve results from a large vector store in milliseconds.

We can run a KNN search with the following code:
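One way to write that search with the Python client, as a sketch: it assumes the hypothetical `images` index with `embedding` and `filename` fields, and a `query_vector` produced by one of the helpers from the previous step. The `num_candidates` value of 50 is an arbitrary example; Elasticsearch requires it to be at least `k`, and larger values trade speed for recall.

```python
INDEX_NAME = "images"  # hypothetical index name


def knn_query(query_vector, k: int = 3, num_candidates: int = 50) -> dict:
    """Build the `knn` section of a search request against the embedding field."""
    if num_candidates < k:
        raise ValueError("num_candidates must be at least k")
    return {
        "field": "embedding",
        "query_vector": list(query_vector),
        "k": k,
        "num_candidates": num_candidates,  # candidates considered per shard
    }


def search_similar(client, query_vector, k: int = 3, index_name: str = INDEX_NAME):
    """Return (score, filename) pairs for the k nearest images."""
    response = client.search(index=index_name, knn=knn_query(query_vector, k=k), size=k)
    return [(hit["_score"], hit["_source"]["filename"]) for hit in response["hits"]["hits"]]


# Usage, with `client` and `query_vector` from the previous steps:
#   for score, filename in search_similar(client, query_vector):
#       print(f"{score:.3f}  {filename}")
```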

The k value sets how many nearest neighbours to return. Elasticsearch gathers num_candidates approximate candidates on each shard, keeps the k most similar from each shard, and merges those into the global top k. The size parameter limits how many of those hits appear in the response. With k set to 3, the query returns three results.

Our code returns:

We have successfully run a semantic search and found images similar to our input query! Above, we can see the three most similar images: a photo of a coffee cup and a cake on a table outdoors, then two duplicate images in our index with coffee cups on tables.

Conclusion

With Elasticsearch and the CLIP features in Roboflow Inference, you can create a multimodal search engine. You can use the search engine for image retrieval, image comparison and deduplication, multimodal Retrieval Augmented Generation with visual prompts, and more.

Roboflow uses Elasticsearch and CLIP extensively at scale. We store more than 100 million CLIP embeddings and index them for use in multimodal search for our customers who want to search through their datasets at scale. Through the growth of data on our platform from hundreds of images to hundreds of millions, Elasticsearch has scaled seamlessly.

To learn more about using Roboflow Inference, refer to the Roboflow Inference documentation. To find data for your next computer vision project, check out Roboflow Universe.

Frequently Asked Questions

What is CLIP (Contrastive Language-Image Pretraining)?

CLIP (Contrastive Language-Image Pretraining) is a computer vision model architecture, and a model trained with it, developed by OpenAI. It was trained “to predict the most relevant text snippet, given an image.”

What is Roboflow Inference?

Roboflow Inference is a high-performance computer vision inference server.