Elasticsearch has native integrations with the industry-leading Gen AI tools and providers. Check out our webinars on going Beyond RAG Basics, or building prod-ready apps with the Elastic vector database.

To build the best search solutions for your use case, start a free cloud trial or try Elastic on your local machine now.

Translating a dataset from one language to another can be a powerful tool. You can gain insights into a dataset you previously might not have been able to, such as detecting new patterns or trends. Using LangChain, you can take a dataset and translate it into the language of your choice. After your dataset has been translated, you can use Elastic’s vector database to gain insight.

This blog post will walk you through how to load data into a DataFrame using Pandas, translate the data from one language to another using LangChain, load the translated data into Elasticsearch, and use Elastic’s vector database capabilities to learn more about your dataset. The full code for this example can be found on the Search Labs GitHub repository.

Setting up your environment for dataset translation

Configure an environment variable for your OpenAI API Key

First, you will want to configure an environment variable for your OpenAI API Key, which you can find on the API keys page in OpenAI's developer portal. You will need this API Key to work with LangChain. You can find more information on getting started in the LangChain quick start guide.

Mac/Unix:

Windows:

Set up Elasticsearch

This demo uses Elasticsearch version 8.15, but you can use any version of Elasticsearch that is higher than 8.0. If you are new, check out our Quick Start on Elasticsearch and the documentation on the integration between LangChain and Elasticsearch.

Python version

The version of Python that is used is Python 3.12.1 but you can use any version of Python higher than 3.9.

Install the required packages

The packages you will be working with are as follows:

- Jupyter Notebooks to work with the dataset interactively.

- nest_asyncio for asynchronous execution for processing your dataset.

- pandas for data manipulation and cleaning of the dataset used.

- To integrate natural language processing capabilities, you will use the LangChain library.

- To work with Elasticsearch, you will use Elasticsearch Python Client to connect to Elasticsearch.

- The langchain-elasticsearch package allows for an extra level of interaction between LangChain and Elasticsearch.

- You will need to install the TikToken package, which is used under the hood to break text into manageable pieces for efficient further processing.

- The datasets package will allow you to easily work with a dataset from Hugging Face.

You can run the following command in your terminal to install the required packages for this blog post.

Dataset

The dataset used is a collection of news articles in Spanish, known as the DACSA corpus. Below is sample of what the dataset looks like:

You will need to authenticate with Hugging Face to use this dataset. You first will need to create a token. The huggingface-cli is installed when you install the datasets package in the first step.

After doing so, you can log in from the command line as follows:

If you don't have a token already you will be prompted to create one from the command line interface.

Loading a Jupyter notebook

You will want to load a Jupyter Notebook to work with your data interactively. To do so, you can run the following command in your terminal.

In the right-hand corner, you can select where it says “New” to create a new Jupyter Notebook.

Translate dataset column from Spanish to English

The code in this section will first load data from a dataset into a Pandas DataFrame and create a subset of the dataset that contains only 25 records. Once your dataset is ready, you can set up a role to allow your model to act as a translator and create an event loop that will translate a column of your dataset from Spanish to English.

The subset that is being used is only 25 records to avoid hitting OpenAI’s rate limits. You may need to use batch loading if you are using a larger dataset.

Import packages

In your Jupyter Notebook, you will first want to import the following packages, including asyncio, which allows you to use async functions, and openai to work with models from OpenAI.

Additionally, you will want to import the following packages, which also include getpass to keep your secrets secure and functools, which will help create an event loop to translate your dataset.

In this code sample, you will create an event loop, which will allow you to translate many rows of a dataset at once using nest_asyncio. Event loops are a core construct of asyncio, they run within a thread and will execute all tasks inside of a thread. Before you can create an event loop you first need to run the following line of code.

Loading in your dataset

You can create a variable called ds, which loads in the ELiRF/dacsa dataset in Spanish. Later in this blog post, you will translate this dataset. This dataset contains articles in Catalan and Spanish, but for this blog post, you will only use the records in Spanish.

The output will show the different datasets available, what columns each has, and how many rows.

Now that the data is loaded, you can translate it into a Pandas DataFrame to make it easier to work with. Since this dataset contains almost 2000 rows, you can create a sample of the dataset to make it smaller for the purposes of this blog post.

You can now view the first 5 rows of the dataset to ensure everything has been properly loaded.

The output should look something like this:

Translating dataset from one language to another

You will want to create an async function to create an event loop, allowing you to translate the data seamlessly. Since you will be using GPT-4o, you will want to set a role to tell your model to act like a translator and another to give directions to translate your data from Spanish to English. You will translate the data from two specified columns of the dataset and add new columns with the translated data back to the original dataset.

Finally, you can run the event loop and translate the specified column of your dataset. This dataset will now have columns entitled “translated summary” and “translated article” that contains the translation of the summaries and articles loaded.

To confirm your data has been translated you can run the head.() method again.

You will now see a new column called translated_summary containing the translation of the summary and another column entitled translated_article containing the translations of the articles from Spanish to English.

Loading the translated articles into a vector database and searching

A vector database allows you to find similar data quickly. It stores vector embeddings, a type of vector data representation that converts words, sentences, and other data into numbers that capture their meaning and relationships. In this section, you will learn how to load data into an Elasticsearch vector database, and perform searches on your newly translated dataset.

Authenticate to Elasticsearch

Now, you can use the Elasticsearch Python client to establish a secure connection to Elasticsearch. You will want to pass in your Elasticsearch host and port, and API key.

Create an index

Before you can load your data into a vector database, you must create an index. You will first create a variable to name your index. From there, check to see if an index exists. If one already does exist, it will delete your index and allow you to create a new index without error.

Adding embeddings

Embeddings leverage a machine learning model to translate text into numbers, allowing you to perform vector searches. You must also set up your index to be used as a vector database.

At this point, you will want to set the embedding variable to OpenAI Embeddings. You will also want to specify the model used as text-embedding-3-large.

Loading data

At this point, you will want to load your translated data into a Python list, allowing you to load the data into the vector database. You can use the LangChain library to turn characters into text and, from there, load the data into a vector database. I chose this method because its ability to handle long documents by splitting text into smaller chunks helps manage memory and processing power efficiently, and the fact that you control how text is split.

Performing searches

You can now ask questions about the data, such as, "What happened in Spain?" You will now be able to get results from your dataset similar to your question.

The output you get back should look something like this:

You can find the complete output for this query here.

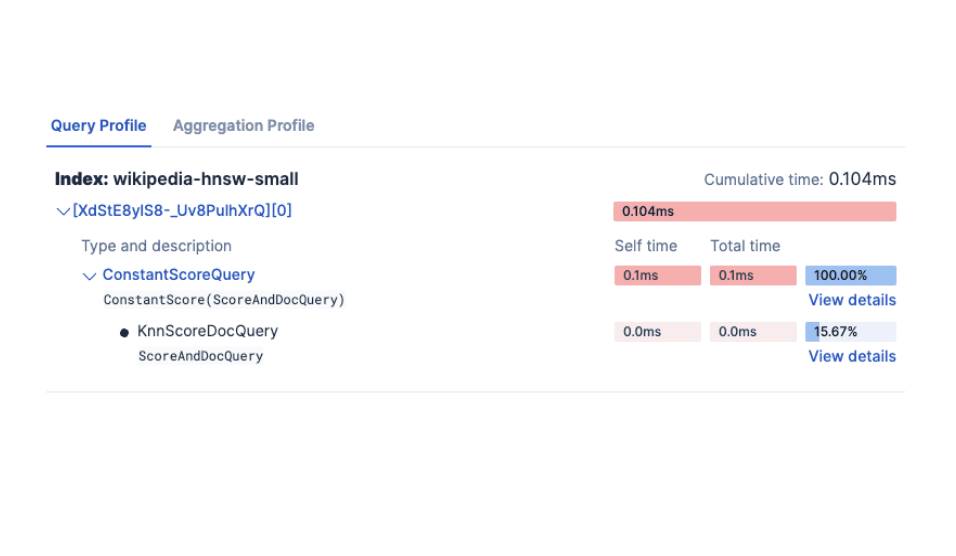

Since you are using kNN by default, if you change the value of k, which is the number of global nearest neighbors to retrieve, you will return more values.

The output should look similar to the following:

You can check out the complete output for this query if needed.

You can also adjust the num_candidates field to the number of approximate nearest neighbor candidates on each shard. You can check out our blog post on the subject for more information. If you are looking to tune this a bit more, you may want to check out our documentation on tuning an approximate KNN search.

Conclusion

This is just the start of how you can utilize Elastic’s vector database capabilities. To learn more about what’s available be sure to check out our resource on the subject. By leveraging LangChain, and Elastic’s vector database capabilities, you can draw insights from a dataset that may contain a language you are not familiar with. In this article, we were able to ask questions regarding specific locations mentioned in the text and receive responses in the translated English text. To dig in deeper on vector database capabilities you may want to check out our tutorial. Additionally you can find another tutorial on working with multilingual datasets on Search Labs as well as this post which walks you through how to build multilingual RAG with Elastic and Mistral.

The full code for this example can be found on the Search Labs GitHub repository. Let us know if you built anything based on this blog or if you have questions on our forums and the community Slack channel.