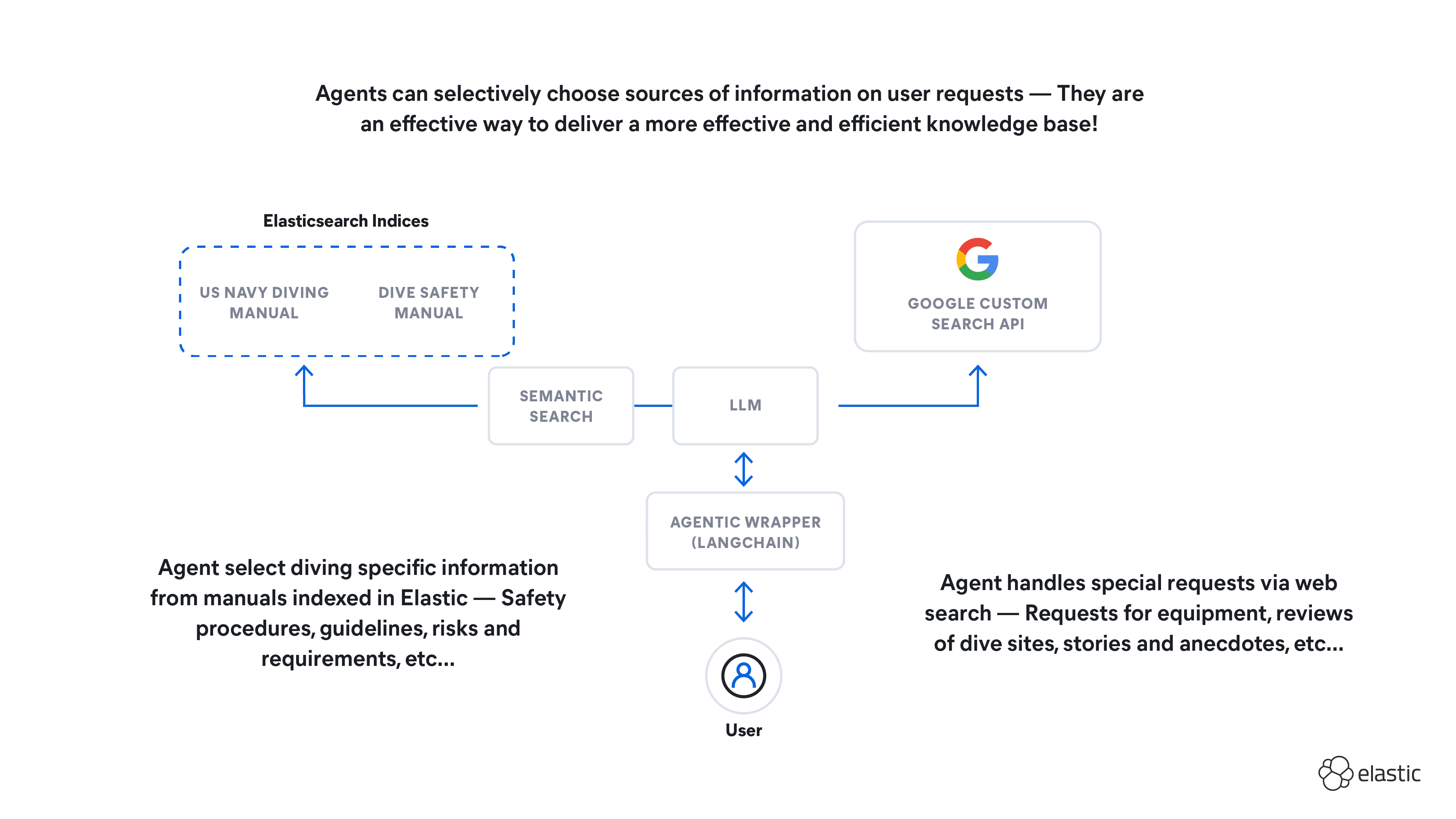

In the last year, we have seen a lot of movement in generative AI. Many new services and libraries have emerged. LangChain has established itself as the most popular library for building applications with large language models (LLMs), such as Retrieval Augmented Generation (RAG) systems. The library makes it easy to prototype and experiment with different models and retrieval systems.

To enable first-class support for Elasticsearch in LangChain, we recently elevated our integration from a community package to an official LangChain partner package. This work makes it straightforward to import Elasticsearch capabilities into LangChain applications. The Elastic team manages the code and the release process through a dedicated repository. We will keep improving the LangChain integration there, making sure that users can take full advantage of the latest improvements in Elasticsearch.

"Our collaboration with Elastic in the last 12 months has been exceptional, particularly as we establish better ways for developers and end users to build RAG applications from prototype to production," said Harrison Chase, Co-Founder and CEO at LangChain. "The LangChain-Elasticsearch vector database integrations will help do just that, and we're excited to see this partnership grow with future feature and integration releases."

Elasticsearch is one of the most flexible and performant retrieval systems, and it includes a scalable data store and vector database. One of our goals at Elastic is to also be the most open retrieval system out there. In a space as fast-moving as generative AI, we want to have the developer's back when it comes to using emerging tools and libraries. This is why we work closely with libraries like LangChain and add native support to the GenAI ecosystem, from using Elasticsearch as a vector database to hybrid search and orchestrating a full RAG application.

Elasticsearch and LangChain have collaborated closely this year. We are putting our extensive experience in building search tools into making your experience of LangChain easier and more flexible. Let's take a deeper look in this blog.

Rapid RAG prototyping with Elasticsearch & LangChain

RAG is a technique for providing users with highly relevant answers to questions. The main advantages over using LLMs directly are that user data can be easily integrated, and hallucinations by the LLM can be minimized. This is achieved by adding a document retrieval step that provides relevant context for the LLM.

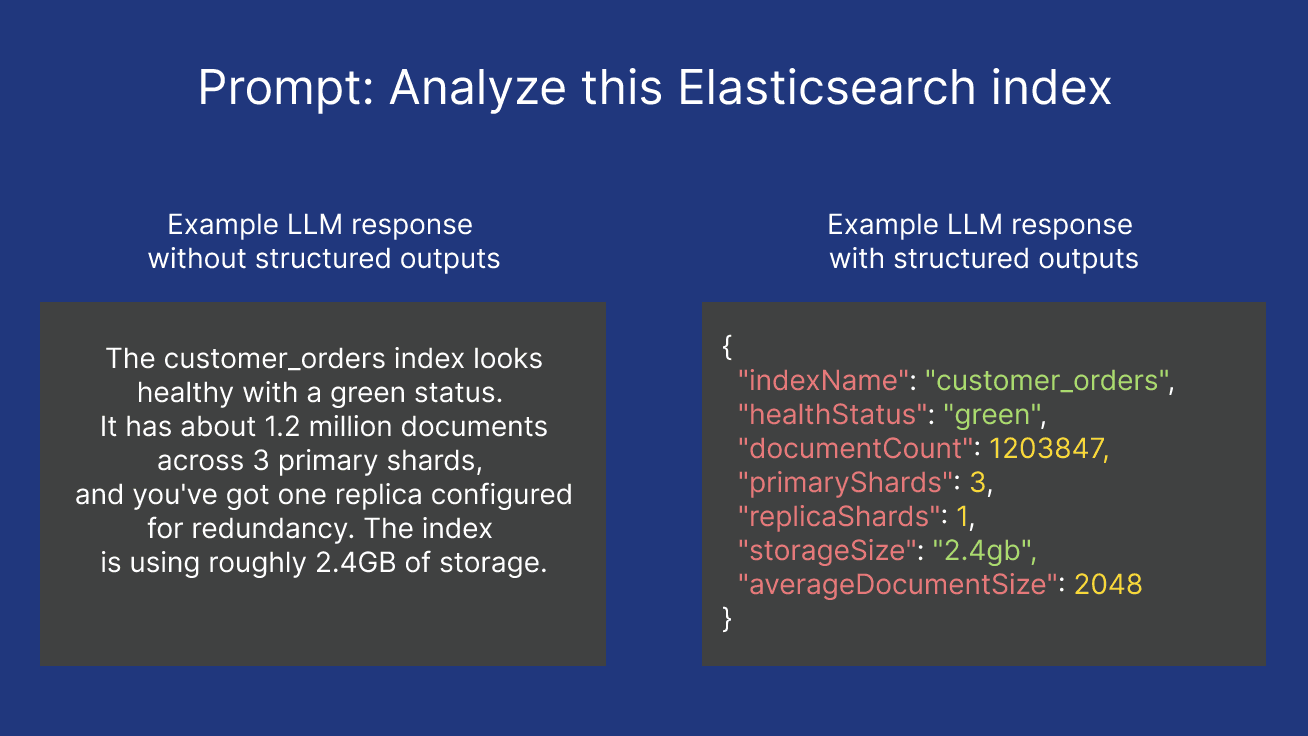

Since its inception, Elasticsearch has been the go-to solution for relevant document retrieval, and it has remained a leading innovator, offering numerous retrieval strategies. When it comes to integrating Elasticsearch into LangChain, we have made it easy to choose between the most common retrieval strategies, for example, dense vector, sparse vector, keyword or hybrid. We have also enabled power users to further customize these strategies. Keep reading to see some examples. (Note that the examples assume you have an Elasticsearch deployment.)

LangChain-Elasticsearch integration package

To use the langchain-elasticsearch partner package, you first need to install it, for example with `pip install langchain-elasticsearch`.

Then you can import the classes you need from the langchain_elasticsearch module, for example, the ElasticsearchStore, which gives you simple methods to index and search your data. In this example, we use Elastic's sparse vector model ELSER (which has to be deployed first) as our retrieval strategy.
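A minimal sketch of that setup could look as follows. The index name `rag-example`, the environment variable names and the model id `.elser_model_2` are assumptions for illustration, so adjust them to your deployment. The package import sits inside the function so that the snippet loads even where `langchain-elasticsearch` is not installed.

```python
import os


def connection_settings(env=os.environ) -> dict:
    """Read Elastic Cloud credentials from the environment.

    The variable names ES_CLOUD_ID and ES_API_KEY are assumptions.
    """
    return {
        "es_cloud_id": env["ES_CLOUD_ID"],
        "es_api_key": env["ES_API_KEY"],
    }


def build_elser_store(index_name: str = "rag-example"):
    """Create an ElasticsearchStore that retrieves with ELSER sparse vectors."""
    # Lazy import: requires `pip install langchain-elasticsearch`.
    from langchain_elasticsearch import ElasticsearchStore

    return ElasticsearchStore(
        index_name=index_name,  # hypothetical index name
        # ELSER must already be deployed; the model id is an assumption.
        strategy=ElasticsearchStore.SparseVectorRetrievalStrategy(
            model_id=".elser_model_2"
        ),
        **connection_settings(),
    )
```

Calling `build_elser_store()` returns a store object that the following steps can index into and search.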

Building a simple RAG application

Now, let's build a simple RAG example application. First, we add some example documents to our Elasticsearch store.
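As a sketch, indexing could look like this. The two example texts are illustrative placeholders, and `store` is assumed to be the `ElasticsearchStore` created earlier (any LangChain vector store with an `add_texts` method would behave the same way).

```python
# Illustrative documents; any list of strings works.
EXAMPLE_TEXTS = [
    "LangChain is a framework for developing applications powered by "
    "large language models (LLMs).",
    "Elasticsearch is a distributed search and analytics engine that can "
    "also serve as a vector database.",
]


def index_examples(store, texts=EXAMPLE_TEXTS):
    """Index the texts and return the ids the store assigned to them.

    `add_texts` is part of the LangChain vector store interface; with the
    ELSER strategy, the sparse vectors are computed inside Elasticsearch.
    """
    return store.add_texts(list(texts))
```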

Next, we define the LLM. Here, we use the default gpt-3.5-turbo model offered by OpenAI, which also powers ChatGPT.
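One way to write this step, assuming the `langchain-openai` package is installed and an OpenAI API key is available in the `OPENAI_API_KEY` environment variable:

```python
def build_llm(model: str = "gpt-3.5-turbo"):
    """Create the chat model that generates the final answers."""
    # Lazy import: requires `pip install langchain-openai`.
    from langchain_openai import ChatOpenAI

    # ChatOpenAI reads the OPENAI_API_KEY environment variable by default.
    return ChatOpenAI(model=model)
```

Passing the model name explicitly keeps the example stable if the library's default model changes.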

Now we are ready to plug together our RAG system. For simplicity, we take a standard prompt for instructing the LLM. We also transform the Elasticsearch store into a LangChain retriever. Finally, we chain the steps together: retrieve the documents, add them to the prompt, and send the prompt to the LLM.
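The steps above can be sketched with the LangChain Expression Language. The prompt text below is a hand-written stand-in for a standard RAG prompt, and `store` and `llm` are assumed to be the Elasticsearch store and chat model defined earlier.

```python
# Stand-in prompt (an assumption); any RAG prompt with these two slots works.
RAG_TEMPLATE = """Answer the question using only the context below.
If the context does not contain the answer, say that you don't know.

Context:
{context}

Question: {question}
"""


def format_docs(docs) -> str:
    """Join the retrieved documents into one context string."""
    return "\n\n".join(doc.page_content for doc in docs)


def build_rag_chain(store, llm):
    """Chain retrieval, prompt construction, the LLM call and parsing."""
    # Lazy imports: require the `langchain-core` package.
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.runnables import RunnablePassthrough

    prompt = ChatPromptTemplate.from_template(RAG_TEMPLATE)
    retriever = store.as_retriever()
    return (
        # The question goes to the retriever and, unchanged, to the prompt.
        {"context": retriever | format_docs, "question": RunnablePassthrough()}
        | prompt
        | llm
        | StrOutputParser()
    )
```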

With these few lines of code, we already have a simple RAG system. Users can now ask questions about the data:
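A small helper for this, assuming `chain` is a RAG chain assembled as described above (any LangChain runnable exposes `invoke`); the sample question is only an illustration:

```python
def ask(chain, question: str) -> str:
    """Send one question through the chain and return the answer text."""
    # `invoke` is the standard entry point of LangChain runnables.
    return chain.invoke(question)


# Usage, assuming `rag_chain` holds the assembled chain:
# print(ask(rag_chain, "Which frameworks can help me build LLM apps?"))
```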

It's as simple as this. Our RAG system can now respond with info about LangChain, which ChatGPT (version 3.5) cannot. Of course there are many ways to improve this system. One of them is optimizing the way we retrieve the documents.

LangChain and the Elasticsearch retriever

The Elasticsearch store offers common retrieval strategies out-of-the-box, and developers can freely experiment with what works best for a given use case. But what if your data model is more complex than just text with a single field? What, for example, if your indexing setup includes a web crawler that yields documents with texts, titles, URLs and tags and all these fields are important for search? Elasticsearch's Query DSL gives users full control over how to search their data. And in LangChain, the ElasticsearchRetriever enables this full flexibility directly. All that is required is to define a function that maps the user input query to an Elasticsearch request.
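For the web crawler case, such a mapping function might look like the sketch below. The field names `title`, `text`, `tags` and `url`, the title boost, and the helper name `build_crawler_retriever` are assumptions for illustration.

```python
from typing import Dict


def multi_field_query(search_query: str) -> Dict:
    """Map the user's query to a Query DSL body spanning several fields."""
    return {
        "query": {
            "multi_match": {
                "query": search_query,
                # Hypothetical field names; "^2" doubles the title's weight.
                "fields": ["title^2", "text", "tags", "url"],
            }
        }
    }


def build_crawler_retriever(index_name: str, cloud_id: str, api_key: str):
    """Wrap the mapping function in an ElasticsearchRetriever."""
    # Lazy import: requires `pip install langchain-elasticsearch`.
    from langchain_elasticsearch import ElasticsearchRetriever

    return ElasticsearchRetriever.from_es_params(
        index_name=index_name,
        body_func=multi_field_query,
        content_field="text",  # field returned as the document content
        cloud_id=cloud_id,
        api_key=api_key,
    )
```

Because the mapping function is plain Python that returns a dictionary, it can be unit tested without a running cluster.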

Let's say we want to add semantic reranking capabilities to our retrieval step. By adding a Cohere reranking step, the results at the top become more relevant without extra manual tuning. For this, we define a Retriever that takes in a function that returns the respective Query DSL structure.

(Note that the query structure for similarity reranking is still being finalized. It will be available in an upcoming release.)
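With that caveat in mind, a body function for reranking might look roughly like this. The keys follow the `text_similarity_reranker` retriever found in later Elasticsearch releases, which is an assumption here and may differ in your version; the field name `text` and the inference endpoint id `cohere-rerank-service` are hypothetical.

```python
from typing import Dict


def text_similarity_reranking(search_query: str) -> Dict:
    """Build a search body that reranks keyword hits with a rerank model."""
    return {
        "retriever": {
            "text_similarity_reranker": {
                # First stage: a plain keyword match produces candidates.
                "retriever": {
                    "standard": {
                        "query": {"match": {"text": search_query}}
                    }
                },
                # Second stage: rerank the candidates on this field.
                "field": "text",
                "inference_id": "cohere-rerank-service",  # hypothetical id
                "inference_text": search_query,
                "rank_window_size": 10,  # rerank only the top 10 candidates
            }
        }
    }
```

A function like this can be passed as `body_func` to `ElasticsearchRetriever.from_es_params`, in the same way as any other Query DSL mapping function.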

This retriever can slot seamlessly into the RAG code above. The result is that the retrieval part of our RAG pipeline is much more accurate, leading to more relevant documents being forwarded to the LLM and, most importantly, to more relevant answers.

Conclusion

Elastic's continued investment in LangChain's ecosystem brings the latest retrieval innovations to one of the most popular GenAI libraries. Through this collaboration, Elastic and LangChain enable developers to rapidly and easily build RAG solutions for end users while providing the necessary flexibility for in-depth tuning of result quality.