From vector search to powerful REST APIs, Elasticsearch offers developers the most extensive search toolkit. Dive into sample notebooks on GitHub to try something new. You can also start your free trial or run Elasticsearch locally today.

What is kNN?

Semantic search is a powerful tool for relevance ranking. It allows you to go beyond using just keywords, but consider the actual meaning of your documents and queries.

Semantic search is based on vector search. In vector search, the documents we want to search have vector embeddings calculated for them. These embeddings are calculated using machine learning models, and returned as vectors that are stored alongside our document data.

When a query is performed, the same machine learning model is used to calculate the embeddings for the query text. Semantic search consists of finding the closest results to the query, by comparing the query embeddings to the document embeddings.

kNN, or k nearest neighbors, is a technique for obtaining the top k closest results to a specific embedding.

There are two main approaches for calculating kNN for a query using embeddings: Exact and approximate. This post will help you:

- Understand what exact and approximate kNN search is

- How to prepare your index for these approaches

- How to decide which approach is best for your use case

Exact kNN: Search everything

One approach for calculating the closer results would be comparing all the existing document embeddings with the one for the query. This would ensure that we're getting the closest matches possible, as we will be comparing all of them. Our search results would be as accurate as possible, as we're considering our whole document corpus and comparing all our document embeddings with the query embeddings.

Of course, comparing against all the documents has a downside: it takes time. We will be calculating the embeddings similarity one by one, using a similarity function, over all the documents. This also means that we will be scaling linearly - having twice the number of documents will potentially take twice as long.

Exact search can be done on vector fields using script_score with a vector function for calculating similarity between vectors.

Approximate kNN: A good estimate

A different approach is to use an approximation instead of considering all the documents. For providing an efficient approximation to kNN, Elasticsearch and Lucene use Hierarchical Navigation Small Worlds HNSW.

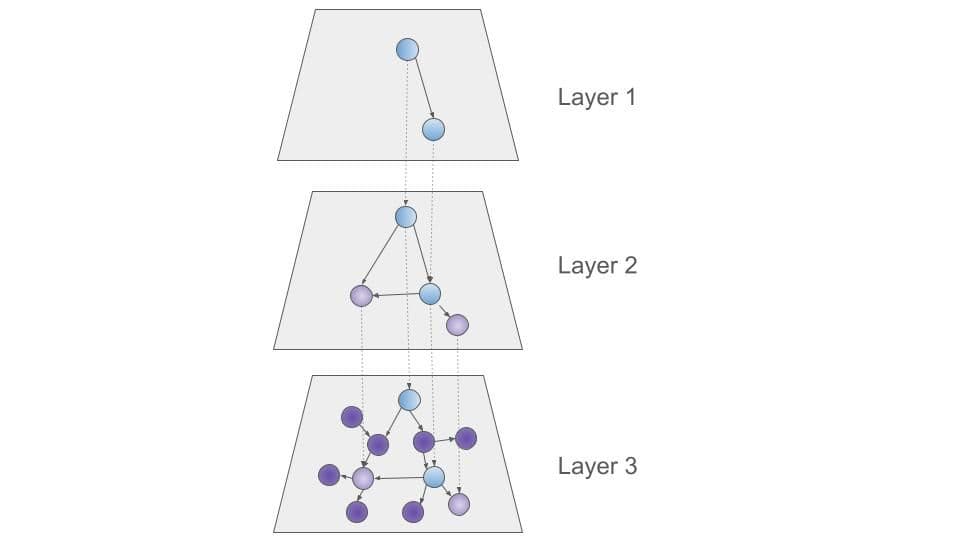

HNSW is a graph data structure that maintains links between elements that are close together, at different layers. Each layer contains elements that are connected, and are also connected to elements of the layer below it. Each layer contains more elements, with the bottom layer containing all the elements.

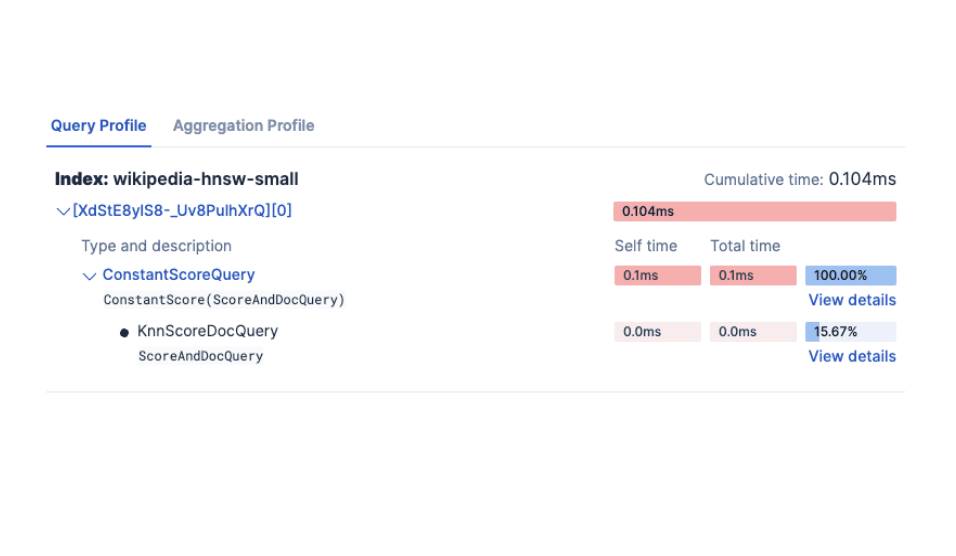

Figure 1 - An example of a HNSW graph. The top layer contains the initial nodes to start the search. These initial nodes serve as entry points to the lower layers, each containing more nodes. The lower layer contains all nodes.

Think of it as driving; there are highways, roads and streets. When driving on a highway you will see some exit signs that describe some high-level areas (like a town or a neighborhood). Then you get to a road that has directions for specific streets. Once you get to a street, you can reach a specific address and the ones that are in the same neighborhood.

HNSW is similar to that, as it creates different levels of vector embeddings. It calculates the highway that is closer to the initial query, and chooses the exits that look more promising to keep looking for the closer addresses to the one we're looking for. This is great in terms of performance, as it doesn't have to consider all documents, but uses this multi-level approach to quickly find an approximation of the closer ones.

But, it's an approximation. Not all nodes are interconnected, meaning that it's possible to overlook results that are closer to specific nodes as they might not be connected. The interconnection of the nodes depends on how the HNSW structure is created.

How good HNSW is depends on several factors:

- How it is constructed. The HNSW construction process will consider a number of candidates to track as the closer ones to a specific node. Increasing the number of candidates to consider will produce a more precise structure, at the cost of spending more time creating it at indexing time. The

ef_constructionparameter in the dense vector index_options is used for this. - How many candidates we're considering when searching. When looking for the closer results, the process will keep track of a number of candidates. The bigger this number is, the more precise it will be, and the slower our search will be. The

num_candidatesin kNN parameters controls this behavior. - How many segments we're searching. Each segment has a HNSW graph that needs to be searched for, and its results combined with the other segment graphs. Having fewer segments will mean searching fewer graphs (so it'll be faster), but will have a less diverse set of results (so it will be less precise).

Overall, HNSW offers a good tradeoff between performance and recall, and allows fine tuning both at indexing and query side.

Searching using HNSW can be done using the kNN search section in most of the situations. Using a kNN query is also possible for more advanced use cases, like:

- Combining kNN with other queries (as part of bool queries, or pinned queries)

- Using

function_scoreto fine tune the scoring - Improve aggregations and field collapse diversity

You can check about kNN query and the differences to the kNN search section in this post. We'll dive into when you'll want to use this method versus the others below.

Indexing for exact and approximate search

dense_vector field type

There are two main indexing types for dense_vector fields you can choose from for storing your embeddings:

- flat types (including flat and int8_flat) store the raw vectors, without adding HNSW data structures. dense_vectors that use flat indexing type will always use exact kNN - the kNN query will actually perform an exact query instead of an approximate one.

- HNSW types (including hnsw and int8_hnsw) create the HNSW data structure, allowing approximate kNN search to be used.

Does it mean that you can't use exact kNN with HNSW field types? Not really! You can use both exact kNN via a script_score query, or use approximate kNN via the kNN section and the kNN query. This allows more flexibility depending on your search use case.

Using HNSW field types means that the HNSW graph structure needs to be built, and that takes time, memory and disk space. If you'll just be using exact search, you can use the flat vector field type. This ensures that your embeddings are indexed optimally and use less space.

Remember to always avoid storing your embeddings in _source in any case, to reduce your storage needs.

Quantization

Using quantization, either flat (int8_flat) or HNSW (int8_hnsw) types of indexing will help you reduce your embeddings size, so you will be able to use less memory and disk storage for holding your embeddings information.

As search performance relies on your embeddings fitting as much as possible in memory, you should always look for ways of reducing your data if possible. Using quantization is a trade off between memory and recall.

How do I choose between exact and approximate kNN search?

There's no one-size-fits-all answer here. You need to consider a number of factors, and experiment, in order to get to the optimal balance between performance and accuracy:

Data size

Searching everything is not something you should avoid at all costs. Depending on your data size (in terms of number of documents and embedding dimensions), it might make sense to do an exact kNN search.

As a rule of thumb, having less than 10 thousand documents to search could be an indication that exact search should be used. Keep in mind that the number of documents to search can be filtered in advance, so the effective number of documents to search can be restricted by the filters applied.

Approximate search scales better in terms of the number of documents, so it should be the way to go if you're having a big number of documents to search, or expect it to increase significantly.

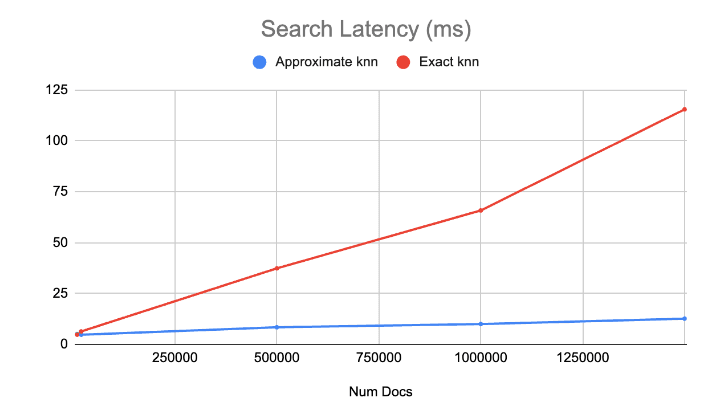

Figure 2 - an example run of exact and approximate kNN using 768 dimensions vectors from the so_vector rally track. The example demonstrates the linear runtime of exact kNN vs the logarithmic runtime of HNSW searches.

Filtering

Filtering is important, as it reduces the number of documents to consider for searching. This needs to be taken into account when deciding on using exact vs approximate. You can use query filters for reducing the number of documents to consider, both for exact and approximate search.

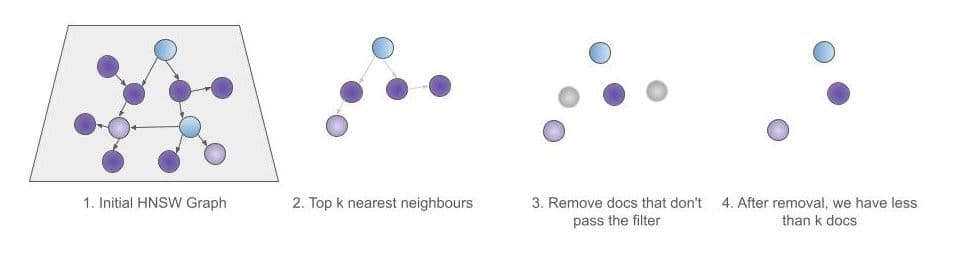

However, approximate search takes a different approach for filtering. When doing approximate searches using HNSW, query filters will be applied after the top k results have been retrieved. That's why using a query filter along with a kNN query is referred to as a post-filter for kNN.

Figure 3 - Post-filtering in kNN search.

The problem with using post-filters in kNN is that the filter is being applied after we have gathered the top k results. This means that we can end up with less than k results, as we need to remove the elements that don't pass the filter from the top k results that we've already retrieved from the HNSW graph.

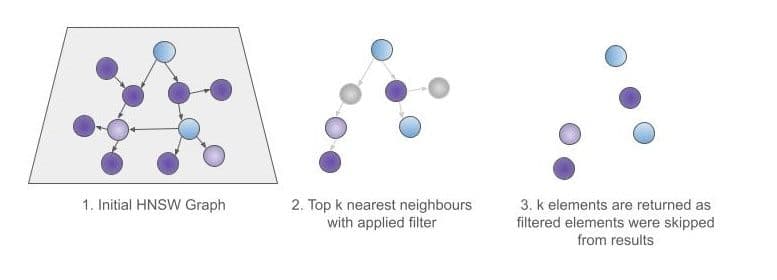

Fortunately, there is another approach to use with kNN, and is specifying a filter in the kNN query itself. This filter applies to the graph elements as the results are being gathered traversing the HNSW graph, instead of applying it afterwards. This ensures that the top k elements are returned, as the graph will be traversed - skipping the elements that don't pass the filter - until we get the top k elements.

Figure 4 - Pre-filtering in kNN search.

This specific kNN query filter is called a kNN pre-filter to specify that it is applied before retrieving results, as opposed to applying it afterwards. That's why, in the context of using a kNN query, regular query filters are referred to as post-filters.

Using a kNN pre-filter affects approximate search performance, as we need to consider more elements while searching in the HNSW graph - we will be discarding the elements that don't pass the filter, so we need to look for more elements on each search to retrieve the same number of results.

Future enhancements for kNN

There are some improvements that are coming soon that will help with exact and approximate kNN.

Elasticsearch will add the possibility to upgrade a dense_vector type from flat to HNSW. This means that you'll be able to start using a flat vector type for exact kNN, and eventually start using HNSW when you need the scale. Your segments will be searched transparently for you when using approximate kNN, and will be transformed automatically to HNSW when they are merged together.

A new exact kNN query will be added so a simple query will be used to do exact kNN for both flat and HNSW fields, instead of relying on a script score query. This will make exact kNN more straightforward.

Conclusion

So, should you use approximate or exact kNN on your documents? Check the following:

- How many documents? Less than 10 thousand (after applying filters) would probably be a good case for exact.

- Are your searches using filters? This impacts how many documents will be searched. If you need to use approximate kNN, remember to use kNN pre-filters to get more results at the expense of performance.

You can compare the performance of both approaches by indexing using a HNSW dense_vector, and comparing kNN search against a script_score for doing exact kNN. This allows comparing both methods using the same field type (just remember to change your dense_vector field type to flat in case you decide to go for exact search!)

Happy searching!

Frequently Asked Questions

How to choose between exact and approximate kNN search in Elasticsearch?

Having less than 10 thousand documents to search could be an indication that exact search should be used. Approximate search scales better in terms of the number of documents, so it should be the preferred choice if you have a big number of documents or expect it to grow significantly.

What is the difference between exact and approximate kNN search?

Exact kNN calculates the closer results by comparing all the existing document embeddings with the one for the query, ensuring accuracy but requiring more time. In contrast, approximate kNN provides an efficient approximation to kNN.