
Building software in any programming language, including Go, is committing to a lifetime of learning. Through her university and working career, Carly has dabbled in many programming languages and technologies, including the latest and greatest implementations of vector search. But that wasn't enough! So recently Carly started playing with Go, too.

Just like animals, programming languages, and your friendly author, search has undergone an evolution of different practices that can be difficult to decide between for your own search use case. In this blog, we'll share an overview of vector search along with examples of each approach using Elasticsearch and the Elasticsearch Go client. These examples will show you how to find gophers and determine what they eat using vector search in Elasticsearch and Go.

Prerequisites

To follow along with this example, ensure the following prerequisites are met:

- Installation of Go version 1.21 or later

- Creation of your own Go repo with the

- Creation of your own Elasticsearch cluster, populated with a set of rodent-based pages, including for our friendly Gopher, from Wikipedia:

Connecting to Elasticsearch

In our examples, we shall make use of the Typed API offered by the Go client. Establishing a secure connection for any query requires configuring the client using either:

- Cloud ID and API key if making use of Elastic Cloud.

- Cluster URL, username, password and the certificate.

Connecting to our cluster located on Elastic Cloud would look like this:
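As a dependency-free illustration of what such a connection involves, here is a minimal sketch that uses only the Go standard library rather than the Go client. It assumes you know your cluster's URL and hold an encoded API key; the helper newAuthorizedRequest and the environment variable names ELASTIC_URL and ELASTIC_API_KEY are our own inventions for this sketch. With the Go client, the Cloud ID and API key would instead be supplied through the client's configuration.

```go
package main

import (
	"bytes"
	"fmt"
	"net/http"
	"os"
)

// newAuthorizedRequest builds a request against an Elasticsearch endpoint and
// attaches the encoded API key using the "ApiKey" authorization scheme.
// This helper is hypothetical and not part of the Go client.
func newAuthorizedRequest(method, baseURL, path, apiKey string, body []byte) (*http.Request, error) {
	req, err := http.NewRequest(method, baseURL+path, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "ApiKey "+apiKey)
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	// ELASTIC_URL and ELASTIC_API_KEY are assumed names, chosen for this sketch.
	baseURL := os.Getenv("ELASTIC_URL")
	apiKey := os.Getenv("ELASTIC_API_KEY")
	if baseURL == "" || apiKey == "" {
		fmt.Println("set ELASTIC_URL and ELASTIC_API_KEY to connect")
		return
	}

	// A GET on the root path returns basic cluster information.
	req, err := newAuthorizedRequest(http.MethodGet, baseURL, "/", apiKey, nil)
	if err != nil {
		fmt.Println("error building request:", err)
		return
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		fmt.Println("error connecting:", err)
		return
	}
	defer resp.Body.Close()
	fmt.Println("cluster responded with status:", resp.Status)
}
```

Reading the credentials from the environment keeps secrets out of the source code, which is worth doing whichever client you use.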

The client connection can then be used for vector search, as shown in subsequent sections.

Vector search

Vector search attempts to solve this problem by converting the search problem into a mathematical comparison using vectors. The document embedding process has an additional stage of converting the document using a model into a dense vector representation, or simply a stream of numbers. The advantage of this approach is that you can search non-text documents such as images and audio by translating them into a vector alongside a query.

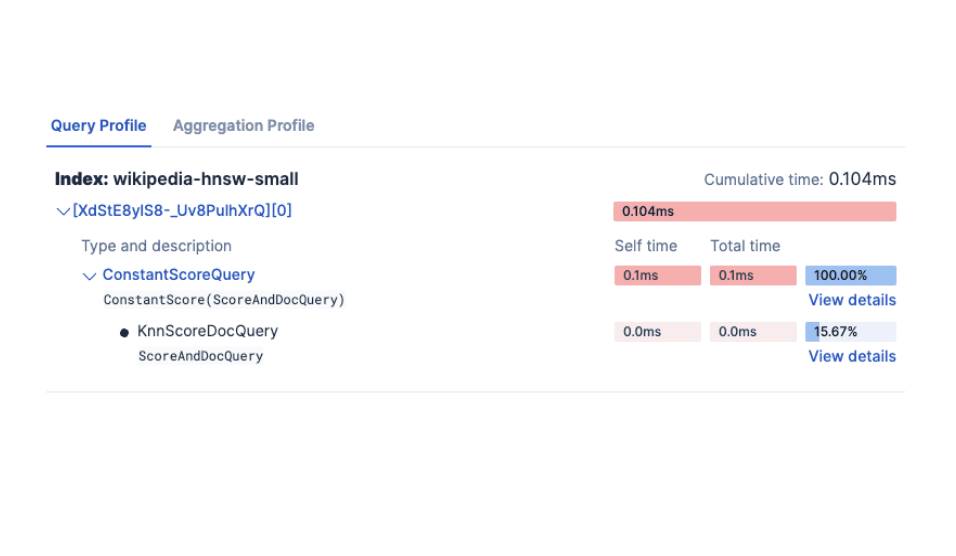

In simple terms, vector search is a set of vector distance calculations. In the below illustration, the vector representation of our query Go Gopher is compared against the documents in the vector space, and the closest results (denoted by constant k) are returned:
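To make the distance calculation concrete, here is a small sketch that scores a query vector against a handful of document vectors and keeps the k closest. It assumes cosine similarity as the measure and uses made-up three-dimensional vectors, whereas real embeddings have hundreds of dimensions. It is a brute-force comparison for illustration only; Elasticsearch uses an approximate nearest neighbor algorithm instead of comparing against every document.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// cosineSimilarity returns the cosine of the angle between a and b.
// It returns 0 if the lengths differ or either vector has zero magnitude.
func cosineSimilarity(a, b []float32) float64 {
	if len(a) != len(b) {
		return 0
	}
	var dot, normA, normB float64
	for i := range a {
		x, y := float64(a[i]), float64(b[i])
		dot += x * y
		normA += x * x
		normB += y * y
	}
	if normA == 0 || normB == 0 {
		return 0
	}
	return dot / (math.Sqrt(normA) * math.Sqrt(normB))
}

// nearest returns the names of the k documents most similar to query, best first.
// Ties are broken alphabetically so the result is deterministic.
func nearest(query []float32, docs map[string][]float32, k int) []string {
	names := make([]string, 0, len(docs))
	for name := range docs {
		names = append(names, name)
	}
	sort.Slice(names, func(i, j int) bool {
		si := cosineSimilarity(query, docs[names[i]])
		sj := cosineSimilarity(query, docs[names[j]])
		if si != sj {
			return si > sj
		}
		return names[i] < names[j]
	})
	if k < 0 {
		k = 0
	}
	if k > len(names) {
		k = len(names)
	}
	return names[:k]
}

func main() {
	// Toy vectors invented for this sketch; they are not real embeddings.
	docs := map[string][]float32{
		"gopher": {0.9, 0.1, 0.0},
		"beaver": {0.6, 0.4, 0.1},
		"golang": {0.1, 0.2, 0.9},
	}
	query := []float32{1.0, 0.0, 0.0}
	fmt.Println(nearest(query, docs, 2)) // the two documents closest to the query
}
```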

Depending on the approach used to generate the embeddings for your documents, there are two different ways to find out what gophers eat.

Approach 1: Bring your own model

With a Platinum license, it's possible to generate the embeddings within Elasticsearch by uploading the model and using the inference API. There are six steps involved in setting up the model:

- Select a PyTorch model to upload from a model repository. For this example, we're using the sentence-transformers/msmarco-MiniLM-L-12-v3 from Hugging Face to generate the embeddings.

- Load the model into Elastic using the Eland Machine Learning client for Python, passing the credentials for our Elasticsearch cluster and the task type text_embedding. If you don't have Eland installed, you can run the import step using Docker, as shown below:

- Once uploaded, quickly test the model sentence-transformers__msmarco-minilm-l-12-v3 with a sample document to ensure the embeddings are generated as expected:

- Create an ingest pipeline containing an inference processor. This will allow the vector representation to be generated using the uploaded model:

- Create a new index containing the field text_embedding.predicted_value of type dense_vector to store the vector embeddings generated for each document:

- Reindex the documents using the newly created ingest pipeline to generate the text embeddings as the additional field text_embedding.predicted_value on each document:
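The last three steps (ingest pipeline, index mapping and reindex) each boil down to a JSON request body sent to Elasticsearch. The sketch below builds those bodies in Go and prints them next to the REST endpoint they would be sent to. The pipeline name text-embeddings, the source index search-rodents and the source field body_content are placeholders we've assumed for illustration, as is the cosine similarity setting; the model ID, target index and vector field come from the steps above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

const (
	modelID     = "sentence-transformers__msmarco-minilm-l-12-v3"
	vectorIndex = "vector-search-rodents"
	vectorField = "text_embedding.predicted_value"

	// Placeholders assumed for this sketch; substitute your own names.
	pipelineID  = "text-embeddings" // hypothetical pipeline name
	sourceIndex = "search-rodents"  // hypothetical index holding the Wikipedia pages
	sourceField = "body_content"    // hypothetical field containing the page text
)

// pipelineBody is the body for PUT _ingest/pipeline/<pipelineID>.
func pipelineBody() map[string]any {
	return map[string]any{
		"description": "Generate text embeddings with the uploaded model",
		"processors": []any{
			map[string]any{
				"inference": map[string]any{
					"model_id":     modelID,
					"target_field": "text_embedding",
					// Map the document field to the input name the model expects.
					"field_map": map[string]any{sourceField: "text_field"},
				},
			},
		},
	}
}

// indexBody is the body for PUT <vectorIndex>.
func indexBody() map[string]any {
	return map[string]any{
		"mappings": map[string]any{
			"properties": map[string]any{
				vectorField: map[string]any{
					"type":       "dense_vector",
					"dims":       384, // vector size produced by this MiniLM model
					"index":      true,
					"similarity": "cosine", // assumed similarity metric
				},
			},
		},
	}
}

// reindexBody is the body for POST _reindex.
func reindexBody() map[string]any {
	return map[string]any{
		"source": map[string]any{"index": sourceIndex},
		"dest":   map[string]any{"index": vectorIndex, "pipeline": pipelineID},
	}
}

// mustJSON serializes v; map keys are emitted in sorted order.
func mustJSON(v any) string {
	b, err := json.Marshal(v)
	if err != nil {
		panic(err)
	}
	return string(b)
}

func main() {
	fmt.Println("PUT _ingest/pipeline/"+pipelineID, mustJSON(pipelineBody()))
	fmt.Println("PUT "+vectorIndex, mustJSON(indexBody()))
	fmt.Println("POST _reindex", mustJSON(reindexBody()))
}
```

The dims value must match the size of the vectors your model produces, so adjust it if you choose a different model.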

Now we can use the Knn option on the same search API using the new index vector-search-rodents, as shown in the below example:
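To show the shape of such a request, the following sketch builds the JSON body of a kNN search that asks Elasticsearch to embed the query text with the uploaded model. It uses plain structs and the standard library rather than the Go client's typed request, so the type and function names here are our own; the values passed for k and num_candidates are assumptions chosen for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// The types below mirror the JSON of a kNN search request body.
// They are hand-written for this sketch and are not the Go client's types.
type textEmbedding struct {
	ModelID   string `json:"model_id"`
	ModelText string `json:"model_text"`
}

type queryVectorBuilder struct {
	TextEmbedding textEmbedding `json:"text_embedding"`
}

type knnQuery struct {
	Field              string             `json:"field"`
	K                  int                `json:"k"`
	NumCandidates      int                `json:"num_candidates"`
	QueryVectorBuilder queryVectorBuilder `json:"query_vector_builder"`
}

type searchRequest struct {
	Knn knnQuery `json:"knn"`
}

// buildKnnSearch returns the body for POST vector-search-rodents/_search.
// Elasticsearch generates the query vector from term using the uploaded model.
func buildKnnSearch(term string, k, numCandidates int) (string, error) {
	body, err := json.Marshal(searchRequest{
		Knn: knnQuery{
			Field:         "text_embedding.predicted_value",
			K:             k,
			NumCandidates: numCandidates,
			QueryVectorBuilder: queryVectorBuilder{
				TextEmbedding: textEmbedding{
					ModelID:   "sentence-transformers__msmarco-minilm-l-12-v3",
					ModelText: term,
				},
			},
		},
	})
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	// k and num_candidates values are assumptions for this sketch.
	body, err := buildKnnSearch("What do Gophers eat?", 10, 10)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(body)
}
```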

Converting the JSON result object via unmarshalling is done in the exact same way as the keyword search example. Constants K and NumCandidates allow us to configure the number of neighbor documents to return and the number of candidates to consider per shard. Note that increasing the number of candidates increases the accuracy of results but leads to a longer-running query as more comparisons are performed.
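As a reminder of what that unmarshalling involves, here is a minimal sketch that decodes the hits of a search response into Go structs using the standard library. The Rodent type and its fields (id, title and url) are assumptions about how the documents are shaped, and the sample response in main is hand-written for illustration rather than real output from a cluster.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Rodent holds the document fields we want to read.
// The field names are assumptions about the indexed documents.
type Rodent struct {
	ID    string `json:"id"`
	Title string `json:"title"`
	URL   string `json:"url"`
}

// searchResponse captures only the parts of the response used here.
type searchResponse struct {
	Hits struct {
		Hits []struct {
			Score  float64         `json:"_score"`
			Source json.RawMessage `json:"_source"`
		} `json:"hits"`
	} `json:"hits"`
}

// parseRodents unmarshals the _source of every hit into a Rodent.
func parseRodents(raw []byte) ([]Rodent, error) {
	var resp searchResponse
	if err := json.Unmarshal(raw, &resp); err != nil {
		return nil, fmt.Errorf("decoding response: %w", err)
	}
	rodents := make([]Rodent, 0, len(resp.Hits.Hits))
	for _, hit := range resp.Hits.Hits {
		var r Rodent
		if err := json.Unmarshal(hit.Source, &r); err != nil {
			return nil, fmt.Errorf("decoding hit: %w", err)
		}
		rodents = append(rodents, r)
	}
	return rodents, nil
}

func main() {
	// Hand-written sample response, not actual cluster output.
	sample := []byte(`{"hits":{"hits":[{"_score":0.5,"_source":{"id":"1","title":"Gopher","url":"https://example.invalid/gopher"}}]}}`)
	rodents, err := parseRodents(sample)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, r := range rodents {
		fmt.Println(r.Title, r.URL)
	}
}
```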

When the code is executed using the query What do Gophers eat?, the results returned look similar to the below, highlighting that the Gopher article contains the information requested, unlike the prior keyword search:

Approach 2: Hugging Face inference API

Another option is to generate these same embeddings outside of Elasticsearch and ingest them as part of your document. As this option does not make use of an Elasticsearch machine learning node, it can be done on the free tier.

Hugging Face exposes a free-to-use, rate-limited inference API that, with an account and API token, can be used to generate the same embeddings manually for experimentation and prototyping to help you get started. It is not recommended for production use. Invoking your own models locally to generate embeddings or using the paid API can also be done using a similar approach.

In the below function GetTextEmbeddingForQuery we use the inference API against our query string to generate the vector returned from a POST request to the endpoint:
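A minimal sketch of such a function, using only the standard library, might look like the following. It makes several assumptions: the endpoint URL is passed in rather than hard-coded (check your Hugging Face account for the current URL for your model), the API token is sent as a bearer token, and the response is a flat JSON array of numbers. The environment variable names HF_ENDPOINT and HF_API_TOKEN and the helper parseEmbedding are our own inventions for this sketch.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
)

// embeddingRequest is the JSON body sent to the inference endpoint.
type embeddingRequest struct {
	Inputs  string          `json:"inputs"`
	Options map[string]bool `json:"options"`
}

// parseEmbedding decodes a response made of a flat JSON array of numbers.
// The response shape is an assumption; some models return nested arrays.
func parseEmbedding(raw []byte) ([]float32, error) {
	var vector []float32
	if err := json.Unmarshal(raw, &vector); err != nil {
		return nil, fmt.Errorf("decoding embedding: %w", err)
	}
	return vector, nil
}

// GetTextEmbeddingForQuery posts term to endpoint and returns the embedding.
func GetTextEmbeddingForQuery(endpoint, token, term string) ([]float32, error) {
	body, err := json.Marshal(embeddingRequest{
		Inputs: term,
		// Ask the API to wait for the model to load instead of failing early.
		Options: map[string]bool{"wait_for_model": true},
	})
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequest(http.MethodPost, endpoint, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "Bearer "+token)
	req.Header.Set("Content-Type", "application/json")

	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	raw, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("inference API returned %s: %s", resp.Status, raw)
	}
	return parseEmbedding(raw)
}

func main() {
	// HF_ENDPOINT and HF_API_TOKEN are assumed names, chosen for this sketch.
	endpoint, token := os.Getenv("HF_ENDPOINT"), os.Getenv("HF_API_TOKEN")
	if endpoint == "" || token == "" {
		fmt.Println("set HF_ENDPOINT and HF_API_TOKEN to call the inference API")
		return
	}
	vector, err := GetTextEmbeddingForQuery(endpoint, token, "What do Gophers eat?")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("dimensions:", len(vector))
}
```

Returning an error alongside the vector lets the caller decide how to react when the rate limit is hit or the model is still loading.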

The resulting vector, of type []float32, is then passed as a QueryVector, replacing the QueryVectorBuilder option that relies on the model previously uploaded to Elastic.
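In terms of the underlying request, the only change is that the kNN clause carries the vector itself. The sketch below builds that JSON body with the standard library; the struct and function names are our own rather than the Go client's types, and the short vector and the k and num_candidates values in main are stand-ins for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// knnVectorQuery mirrors the JSON of a kNN clause with a precomputed vector.
// It is hand-written for this sketch and is not one of the Go client's types.
type knnVectorQuery struct {
	Field         string    `json:"field"`
	QueryVector   []float32 `json:"query_vector"`
	K             int       `json:"k"`
	NumCandidates int       `json:"num_candidates"`
}

type vectorSearchRequest struct {
	Knn knnVectorQuery `json:"knn"`
}

// buildVectorSearch returns the body for POST vector-search-rodents/_search
// using an embedding generated outside of Elasticsearch.
func buildVectorSearch(vector []float32, k, numCandidates int) (string, error) {
	body, err := json.Marshal(vectorSearchRequest{
		Knn: knnVectorQuery{
			Field:         "text_embedding.predicted_value",
			QueryVector:   vector,
			K:             k,
			NumCandidates: numCandidates,
		},
	})
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	// A two-value stand-in; a real embedding from this model has 384 values.
	vector := []float32{0.5, 0.25}
	body, err := buildVectorSearch(vector, 10, 10)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(body)
}
```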

Note that the K and NumCandidates options remain the same whichever of the two approaches is used, and that the same results are generated because we are using the same model to generate the embeddings.

Conclusion

Here we've discussed how to perform vector search in Elasticsearch using the Elasticsearch Go client. Check out the GitHub repo for all the code in this series. Follow on to part 3 to gain an overview of combining vector search with the keyword search capabilities covered in part one in Go.

Until then, happy gopher hunting!