Elasticsearch allows you to index data quickly and in a flexible manner. Try it free in the cloud or run it locally to see how easy indexing can be.

Elastic Cloud is a cloud-based managed service offering provided by Elastic. Elastic Cloud allows customers to deploy, manage, and scale their Elasticsearch clusters in Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure.

MongoDB is a popular NoSQL document-oriented database which stores data in the form of JSON-like documents.

Below is a step-by-step guide on how to ingest and sync data from the MongoDB database into Elasticsearch using the Elastic MongoDB connector.

Why Elastic

Elasticsearch is an industry leading scalable data store and vector database that allows you to store, search, and analyze large volumes of data quickly and in near real-time.

Elastic offers rich Generative-AI experience with large language models (LLMs) , extensive machine learning capabilities ,vector search and unrivaled out of the box semantic search capabilities, which MongoDB customers can use to have an exceptional search experience on their business data.

Implementation

In this blog, we will use MongoDB Atlas and use Elastic MongoDB native connector to ingest and sync data into Elastic Cloud. The connector can be used with the on-prem MongoDB version too and is compatible with MongoDB 3.6 and later.

Data flow

MongoDB setup

- If you don't have an existing setup then Create an account and deploy Atlas cluster. We will be using a free tier for data sync.

- Elastic connectors running in Elastic Cloud must have access to MongoDB Atlas cluster to fetch data. Elastic provides static IP addresses and customers can use egress IP range to restrict access to their MongoDB cluster.

You can configure IP ranges under MongoDB Atlas -> Security -> Network Access

If connector can’t access MongoDB then similar to below error will come

Note: Connector can co-exist with Elastic Traffic Filter setup. Connector is an outbound connection from Elastic Cloud to a data source whereas Traffic filter(one-way) is an outbound from cloud provider network to Elastic Cloud.

- Create a database user. User ID and password will be required to configure the connector. A read-only user with permission to access database and collection is sufficient. For testing I have used the default role.

- Load sample data (available by default in MongoDB Atlas) to create a database or use your existing database and collection to be used later in connector configuration.

Click on "Browse Collections" to verify the data. Database name and collection information will be required to set up the connector.

- Get MongoDB Atlas host information, which is required to configure the connector.

From Overview page, click on Connect:

Select Shell as a connection method:

Copy the connection string. The host name will look like this: _mongodb+srv://cluster0.xxxxxxx.mongodb.net

Elastic Cloud setup

- Create an Elastic Cloud account and deployment (If you already have one, you can skip this step.)

- Login to Kibana and go to Search -> Content -> Indices -> Create a new index.

Select "Connector" as the ingestion method.

Search for MongoDB connector. There are many native connectors available.

Give a name for your index under "Create an Elasticsearch index". Index name will be prefixed with search-

Under the Configuration tab, provide details captured during MongoDB Atlas setup.

- Enter MongoDB Atlas host name in Server hostname

- Database username and password

- Database and collection name to sync data from

Note: You can only specify one database and collection at a time.

Upon successful setup, you will see the status message below.

Note: There is a bug in v8.12.0 where SSL/TLS connection must be enabled else sync will fail.

Data sync

Once configuration is done successfully, click on the "Sync" button to perform the initial Full Content sync. For a recurring sync, configure the sync frequency under the Scheduling tab. It is disabled by default, so you'll need to toggle the Enable button to enable it. Once scheduling is done, the connector will run at configured time and pull all the content from the MongoDB database and specified collection.

Sync rules

Use sync rules and ingest pipelines to customize syncing behavior for specific indices. Each sync is a full sync and for each document the connector transforms every MongoDB field into an Elasticsearch field.

Note: Files bigger than 10MB will not be ingested and file permissions set at MongoDB level are not synced as is into the Elastic Deployment.

Search

You can validate the data ingested from MongoDB into Elastic Cloud directly from the connector under Documents tab.

Or you can easily create Search applications which provide many search options.

Monitor

In addition to ingesting and searching data from MongoDB into Elasticsearch, you can use the Elastic MongoDB integration (which is different from connector) to ingest logs and metrics and monitor MongoDB clusters.

Note 1: For data sync we have used MongoDB Atlas free tier but there are limitations with free and shared tier for metrics and log ingestion. Metrics commands used by integrations are not supported on the free/shared tier and logs cannot be exported. To demonstrate the feature, MongoDB Atlas Paid tier is used for monitoring.

Note 2: Users used to collect metrics and logs should have a clusterMonitor role to run privileged commands.

Metrics

For ingesting metrics from the MongoDB Atlas cluster, we will use the Elastic MongoDB integration. At a high level use a fleet server (available by default) in Elastic Cloud and install an agent on a host (access required to MongoDB cluster, add host IP in Atlas Network access). MongoDB integration is configured and deployed on the agent which runs Metrics command on MongoDB server to ingest data into Elasticsearch.

Elastic Integration comes with assets which include default dashboards, mappings etc.

For configuration, Atlas host information is required in the following format: mongodb+srv://user:pass@host

Copy host information from “Connect” to MongoDB database.

Elastic Integration configuration:

Metrics Dashboard:

Logs

If you are using a self-managed MongoDB version then you can use MongoDB integration to ingest logs in addition to metrics above. However for MongoDB Atlas which is a managed service, the log path is not exposed so you cannot directly use the integration.

MongoDB Atlas provides an option to push logs to AWS S3 bucket (not available in free tier). Elastic AWS custom logs integration can then pull the MongoDB logs from S3 bucket.

MongoDB configuration:

Note: Follow the prompts on MongoDB console to enable log export to S3

Elastic configuration:

We will use Custom AWS Logs integration in an existing fleet and agent setup which already has MongoDB integration.

AWS integration requires credentials and permission to access AWS resources. There are multiple credentials methods and for this blog we have used Access Key ID/Secret Access Key.

AWS high level steps:

- Create S3 Bucket

- Create SQS Queue and update Access Policy

- Enable Event notification in S3 bucket using SQS queue created

Note: Ensure SQS Access policies are correct and SQS read from S3 bucket.

Toggle on “Collect Logs from S3 Bucket” in Custom AWS Logs integration and provide either AWS S3 bucket ARN configured to get MongoDB logs or SQS queue URL. SQS method is recommended as polling all S3 objects is expensive, instead integration can retrieve logs from S3 objects that are pointed to by S3 notification events read from an SQS queue.

Once deployed, integration will start reading logs from the bucket and ingest in Elasticsearch. Additional field mapping might be required for since it is an integration for custom logs.

Validate the logs in Kibana -> Discover for Data view : logs-*

Check fields : aws.s3.bucket.arn OR aws.s3.bucket.name OR log.file.path to confirm logs are coming from configured s3 bucket only.

Scaling

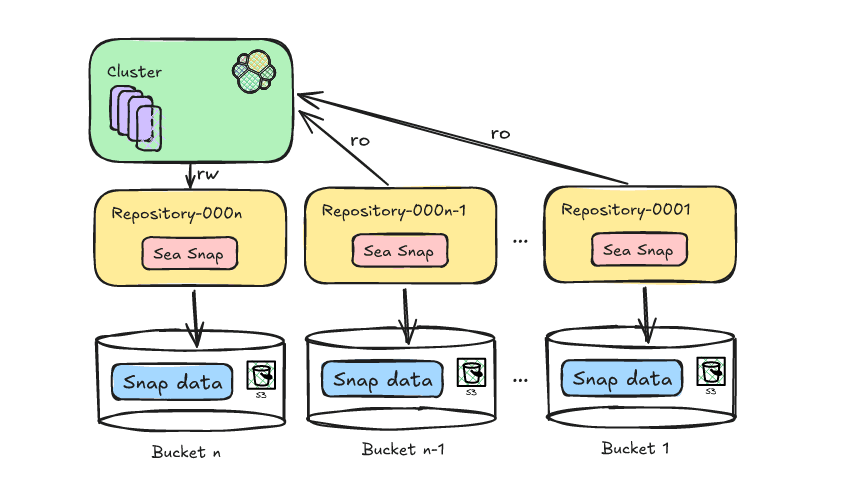

Currently the Elastic MongoDB connector allows 1 database and 1 collection configuration at a time. Generally a large MongoDB deployment will have multiple databases and collections. The connector supports separate configuration of multiple instances of database and collection and allows you to ingest data from different databases and collections. Although you need to create different indices each time, you can have a similar naming convention and create a data view for common search.

You can see how it looks to have multiple instances of collection here:

Note: Connectors run on Enterprise search instances and it is important to monitor its usage and scale as per the load.

MongoDB compatible DB

The Elastic MongoDB connector is compatible and works with databases which are compatible with MongoDB API’s and drivers. For example, you can use the connector to ingest data from Amazon DocumentDB and Azure CosmosDB too in a similar way.

Conclusion

The Elastic MongoDB connector provides a simple way to sync MongoDB data into Elasticsearch deployments. It is relatively easy to implement connectors and take advantage of industry leading search experience with Elastic. Learn more about connectors in our documentation.