From vector search to powerful REST APIs, Elasticsearch offers developers the most extensive search toolkit. Dive into sample notebooks on GitHub to try something new. You can also start your free trial or run Elasticsearch locally today.

The challenge of finding nearest neighbors efficiently in high-dimensional spaces, particularly as datasets grow in size, is one of the most important ones in the context of vector search. As discussed in our previous blog post, brute force nearest neighbor search might be the best choice, when dataset size is limited. On the other hand, as vector dataset size increases, switching to approximate nearest neighbor search can be useful to retain query speed without sacrificing accuracy.

Elasticsearch implements approximate nearest neighbor search via the Hierarchical Navigable Small World algorithm. HNSW offers an efficient way to navigate the vector space, reducing the computational cost while still maintaining high search accuracy. In particular its hierarchical layered structure makes it possible to visit candidate neighbors and decide whether to include them in the final result set with fewer vector distance computations.

However, despite its strengths, the HNSW algorithm can be further optimized for large-scale vector searches. One effective way to enhance HNSW's performance is by finding ways to stop visiting the graph under specific circumstances, called early termination. This blog post explores early termination concepts for HNSW and how they can optimize query execution.

HNSW redundancy

HNSW is an approximate nearest neighbor algorithm that builds a layered graph where nodes represent vectors and edges represent the proximity between vectors in the vector space. Each layer contains incrementally a larger number of graph nodes. When querying, the search traverses this graph, starting at a random entry point and navigating towards the closest neighbors through the layers.

The search process is iterative and expands as it examines more nodes and vectors. This balance between speed and accuracy is central to HNSW, but it can still result in redundant computations, especially when large datasets are involved.

In HNSW, redundant computations primarily occur when the algorithm continues to evaluate new nodes or candidates that provide little to no improvement in finding actual neighbors to a query. This happens because, in standard HNSW traversal, the algorithm proceeds layer-by-layer, exploring and expanding candidate nodes until all possible paths are exhausted.

In particular, this kind of redundancy can arise when the dataset includes highly similar or duplicate vectors, clusters with dense intra-cluster connections, or vectors in very high-dimensional spaces with little intrinsic structure. Such redundancy leads to visiting unnecessary edges, increasing memory usage and potentially slowing search performance without improving accuracy. In high-dimensional spaces where similarity scores decay quickly, some edges often fail to contribute meaningful shortcuts in the graph, resulting in inefficient navigation paths too.

So, in case a number of unnecessary computations can be performed while traversing the graph, one could try to improve the HNSW algorithm to mitigate this issue.

Early Termination FTW

Navigating the solution space is a fundamental concept in optimization and search algorithms, where the goal is to find an optimal solution among a set of possible solutions. The solution space represents all potential solutions to a given problem, and navigating it involves systematically exploring these possibilities. This process can be visualized as moving through a graph where each node represents a different solution, and the objective is to identify the node that best meets the criteria of the problem. Understanding how to effectively navigate the solution space is crucial for solving complex problems that have huge numbers of solutions. Early termination is a generic optimization strategy that can be applied to any such algorithm to make smart decisions about when stopping searching for solutions under certain circumstances.

If any solution is considered 'good enough' to meet a desired criteria, the search can stop and the solution can be considered either a good candidate or an optimal solution. This means some potentially better solutions might remain unexplored, so it's tricky to find a perfect compromise between efficiency and quality of the final solution(s).

Early Termination in HNSW

In the context of HNSW, early termination can be used to stop the search process before all potential candidates nodes (vectors) have been fully evaluated. Evaluating a candidate node means calculating the actual similarity between the query vector and the vector corresponding to the node in the graph that is being processed; for this reason, when skipping a bunch of such operations while traversing each layer, the computational cost of the query can be greatly reduced.

On the other hand, skipping a candidate that would otherwise result in a true nearest neighbor will surely affect the quality of the search results, potentially missing a few candidate vectors that are close to the query vector.

Consequently the trade-off between the efficiency gains and loss in accuracy is to be handled with care. Early termination is useful in case of:

- Sublinear Efficiency: You want to optimize performance in exchange for slightly lower recall.

- High-Throughput Systems: Faster response times are more valuable than the highest accuracy.

- Resource Constraints: Compute or memory limitations make full traversal of the HNSW graph undesirable.

In the context of HNSW there are a number of options for implementing an early termination strategy.

- Fixed candidate pool size: One of the simplest early termination strategies is to limit the size of the candidate pool (e.g., the number of nodes evaluated during the search). In HNSW, the search process is iterative, expanding to more nodes as it progresses. By setting a limit on the number of candidates considered, we can terminate the search early and return results based on only a subset of the total graph. Of course this can be implemented either in a layer-wise fashion or accounting for all the nodes across the whole graph.

- Distance threshold-based termination: Another potentially effective early termination strategy is to make smart decisions based on distance computations between the query vector and the vectors corresponding to HNSW nodes. One could set a threshold based on the distance between the query vector and the current closest vector. If a vector is found whose distance is below a specified threshold, the search can be stopped early, assuming that further exploration is unlikely to yield significantly better results. This goes hand in hand with constraints on the fact that nodes get visited in a smart order, to avoid missing possibly relevant neighbors.

- Dynamic early termination based on quality estimation: A more sophisticated approach is dynamically adjusting the termination criteria based on the "quality" of the results found during each search query. If the search process is converging quickly on high-quality neighbors (e.g., neighbors with very close distances), the algorithm can terminate early, even without hitting a predefined threshold.

The first two strategies fall in the category of "fixed configuration" early termination strategies, so that the search terminates based on fixed constraints that do not take into account query-specific challenges. In fact not all queries are equally hard, some queries require more candidate visit than others, for example, when the distribution of the vectors is skewed. Consequently some query vectors might fall into denser regions of the vector space, so that they have more candidate nearest neighbors, while some others might fall into "less populated regions", making it harder to find their true nearest neighbors.

Because of such situations, early termination strategies that can adapt to the density of the vector space (and consequently to the connectivity of the HNSW graph) seem more attractive for real-life scenarios. Therefore determining the optimal point at which to stop searching for each query is more likely to lead to substantial latency reductions without compromising accuracy. Such kinds of early termination strategies are dubbed adaptive as they adapt to each query instance to decide when to terminate the search process.

For example, an adaptive early termination strategy can utilize machine learning models to predict how much search effort is sufficient for a given query to achieve the desired accuracy. One such a model dynamically adjusts how much of the graph to explore based on the individual query's characteristics and intermediate search results.

Speaking about intermediate search results, they are often powerful predictors of how much further to search. If the initial results are already close to the query, the nearest neighbors are likely to be found soon, allowing for early termination. Conversely, poor initial results indicate a need for more extensive exploration, (see this paper).

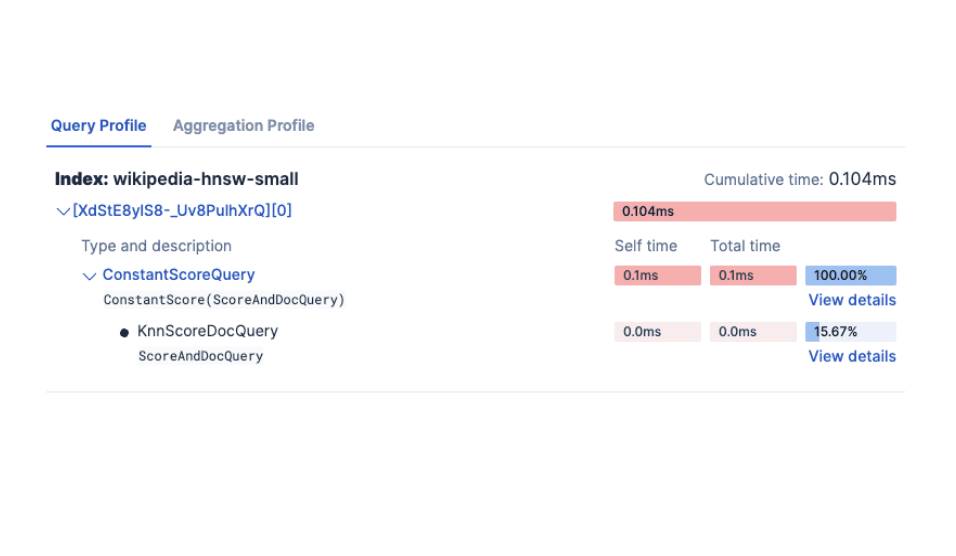

Lucene makes it possible to implement early termination in HNSW by means of the KnnCollector interface that exposes an earlyTerminated() method, but it also offers a couple of fixed configuration early termination strategies for HNSW:

- TimeLimitingKnnCollector makes it possible to stop the HNSW graph traversing when a certain time threshold is met.

- AbstractKnnCollector is a base

KnnCollectorimplementation that stops the graph traversal once a fixed number of graph nodes are visited.

As an additional example, to implement a distance threshold-based termination, we could rely on the minimum competitive similarity recorded by Lucene during HNSW traversal (used to make sure only competitive nodes are explored) and early exit when it falls below a given threshold.

Conclusion

Early termination strategies for approximate KNN can lead to notable speedups while retaining good accuracy, if correctly implemented. Fixed strategies are easier to implement but they might require more tuning and also not work well across different queries. Dynamic / adaptive strategies, on the other hand, are harder to implement but have the advantage of being able to better adapt to different search queries.

Frequently Asked Questions

What is HNSW?

HNSW is an approximate nearest neighbor algorithm that builds a layered graph where nodes represent vectors and edges represent the proximity between vectors in the vector space.

How can the HNSW algorithm be optimized?

One effective way to enhance HNSW's performance is by finding ways to stop visiting the graph under specific circumstances, called early termination.

What is early termination in HNSW?

In the context of HNSW, early termination can be used to stop the search process before all potential candidates nodes (vectors) have been fully evaluated.