Quick start: modules for common log formats

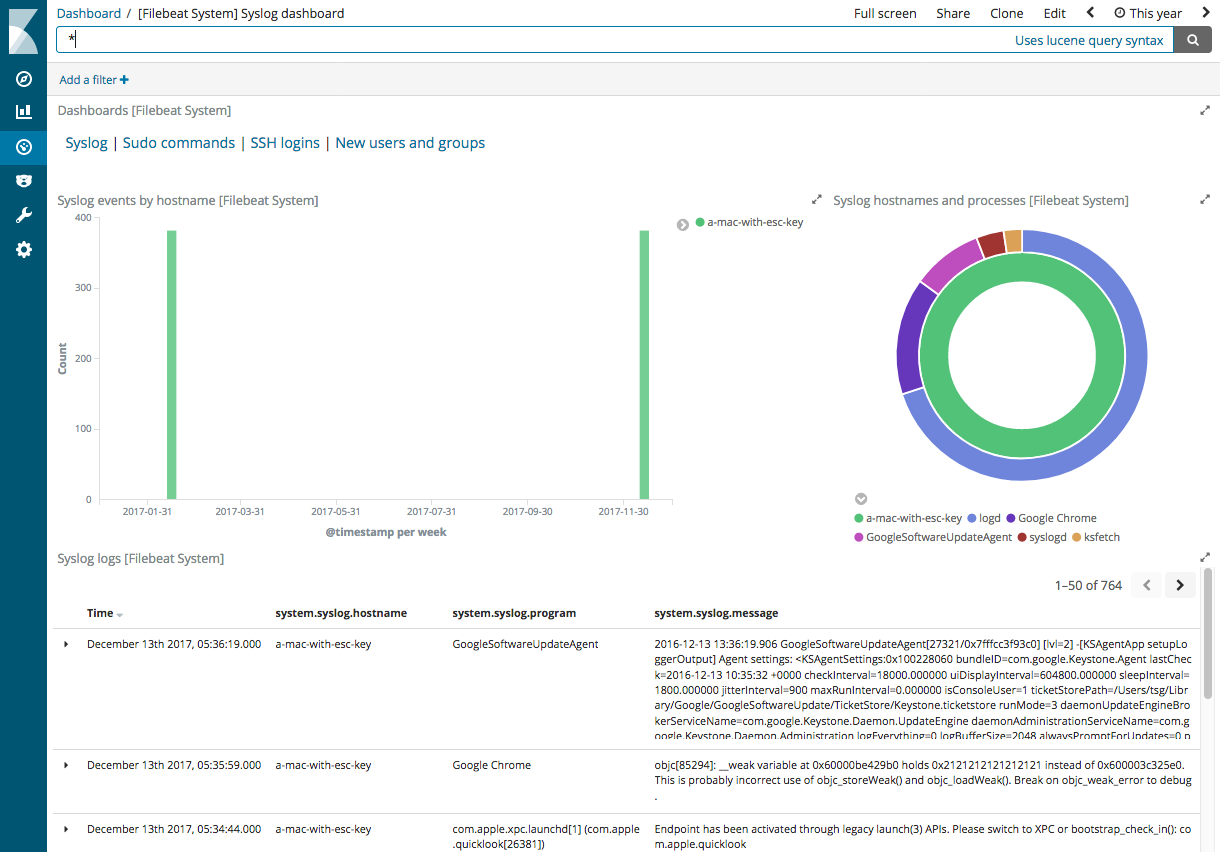

Filebeat provides a set of pre-built modules that you can use to rapidly implement and deploy a log monitoring solution, complete with sample dashboards and data visualizations (when available), in about 5 minutes. These modules support common log formats, such as Nginx, Apache2, and MySQL, and can be run by issuing a simple command.

This topic shows you how to run the basic modules with minimal extra configuration. For detailed documentation and the full list of available modules, see Modules.

Can’t find a module for your log file type? Follow the numbered steps under Getting Started With Filebeat to set up and configure Filebeat manually.

Prerequisites

Before running Filebeat modules:

- Install and configure the Elastic Stack. See Getting started with the Elastic Stack.

- Complete the Filebeat installation instructions described in Step 1: Install Filebeat. After installing Filebeat, return to this quick start page.

- Verify that Elasticsearch and Kibana are running and that Elasticsearch is ready to receive data from Filebeat.

Running Filebeat modules

To set up and run Filebeat modules:

1. In the filebeat.yml config file, set the location of the Elasticsearch installation. By default, Filebeat assumes Elasticsearch is running locally on port 9200.

   - If you’re running our hosted Elasticsearch Service on Elastic Cloud, specify your Cloud ID. For example:

     cloud.id: "staging:dXMtZWFzdC0xLmF3cy5mb3VuZC5pbyRjZWM2ZjI2MWE3NGJmMjRjZTMzYmI4ODExYjg0Mjk0ZiRjNmMyY2E2ZDA0MjI0OWFmMGNjN2Q3YTllOTYyNTc0Mw=="

   - If you’re running Elasticsearch on your own hardware, set the host and port where Filebeat can find the Elasticsearch installation. For example:

     output.elasticsearch:
       hosts: ["myEShost:9200"]

2. If Elasticsearch and Kibana are secured, set credentials in the filebeat.yml config file before you run the commands that set up and start Filebeat.

   - If you’re running our hosted Elasticsearch Service on Elastic Cloud, specify your cloud auth credentials. For example:

     cloud.auth: "elastic:YOUR_PASSWORD"

   - If you’re running Elasticsearch on your own hardware, specify your Elasticsearch and Kibana credentials:

     output.elasticsearch:
       hosts: ["myEShost:9200"]
       username: "filebeat_internal"
       password: "YOUR_PASSWORD"
     setup.kibana:
       host: "mykibanahost:5601"
       username: "my_kibana_user"
       password: "YOUR_PASSWORD"

     This example shows a hard-coded password, but you should store sensitive values in the secrets keystore.

     The username and password settings for Kibana are optional. If you don’t specify credentials for Kibana, Filebeat uses the username and password specified for the Elasticsearch output.

     To use the pre-built Kibana dashboards, this user must have the kibana_user built-in role or equivalent privileges.

   For more information, see Securing Filebeat.

3. Enable the modules you want to run. For example, the following command enables the system, nginx, and mysql modules:

   deb and rpm:

   filebeat modules enable system nginx mysql

   mac:

   ./filebeat modules enable system nginx mysql

   brew:

   filebeat modules enable system nginx mysql

   linux:

   ./filebeat modules enable system nginx mysql

   win:

   PS > .\filebeat.exe modules enable system nginx mysql

   This command enables the module configs defined in the modules.d directory. See Specify which modules to run for other ways to enable modules.

   To see a list of enabled and disabled modules, run:

   deb and rpm:

   filebeat modules list

   mac:

   ./filebeat modules list

   brew:

   filebeat modules list

   linux:

   ./filebeat modules list

   win:

   PS > .\filebeat.exe modules list

4. Set up the initial environment:

   deb and rpm:

   filebeat setup -e

   mac:

   ./filebeat setup -e

   brew:

   filebeat setup -e

   linux:

   ./filebeat setup -e

   win:

   PS > .\filebeat.exe setup -e

   The setup command loads the recommended index template for writing to Elasticsearch and deploys the sample dashboards (if available) for visualizing the data in Kibana. This is a one-time setup step.

   The -e flag is optional and sends output to standard error instead of syslog.

   The ingest pipelines used to parse log lines are set up automatically the first time you run the module, assuming the Elasticsearch output is enabled. If you’re sending events to Logstash, or plan to use Beats central management, also see Load ingest pipelines manually.

5. Run Filebeat.

   If your logs aren’t in the default location, set the paths variable before running Filebeat (see Set the paths variable).

   deb and rpm:

   service filebeat start

   mac:

   ./filebeat -e

   brew:

   filebeat -e

   linux:

   ./filebeat -e

   win:

   PS > Start-Service filebeat

   If the module is configured correctly, you’ll see INFO Harvester started messages for each file specified in the config.

   Depending on how you’ve installed Filebeat, you might see errors related to file ownership or permissions when you try to run Filebeat modules. See Config File Ownership and Permissions in the Beats Platform Reference for more information.
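Combining the self-managed settings from steps 1 and 2 with the keystore advice, a filebeat.yml might look like the following sketch. The host names are the placeholders used above; ES_PWD and KIBANA_PWD are hypothetical keystore key names, and the sketch assumes you have already created the keystore and added those keys (for example with filebeat keystore create and filebeat keystore add ES_PWD).

```yaml
# filebeat.yml: sketch for a self-managed, secured cluster.
# ES_PWD and KIBANA_PWD are hypothetical keys that must already exist in the Filebeat keystore.
output.elasticsearch:
  hosts: ["myEShost:9200"]
  username: "filebeat_internal"
  password: "${ES_PWD}"          # resolved from the keystore instead of stored in plain text

setup.kibana:
  host: "mykibanahost:5601"
  # Kibana credentials are optional; if omitted, Filebeat uses the Elasticsearch output credentials.
  username: "my_kibana_user"
  password: "${KIBANA_PWD}"
```

Referencing keystore entries with the ${KEY} syntax keeps the config file shareable while the secret values stay in the keystore.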

Set the paths variable

The examples here assume that the logs you’re harvesting are in the location expected for your OS and that the default behavior of Filebeat is appropriate for your environment.

Each module and fileset has variables that you can set to change the default behavior of the module, including the paths where the module looks for log files. You can set the path in configuration or from the command line.

To set the path at the command line, use the -M flag. The variable name must include the module and fileset name. For example:

deb and rpm:

filebeat -e -M "nginx.access.var.paths=[/var/log/nginx/access.log*]"

mac:

./filebeat -e -M "nginx.access.var.paths=[/usr/local/var/log/nginx/access.log*]"

brew:

filebeat -e -M "nginx.access.var.paths=[/usr/local/var/log/nginx/access.log*]"

linux:

./filebeat -e -M "nginx.access.var.paths=[/usr/local/var/log/nginx/access.log*]"

win:

PS > .\filebeat.exe -e -M "nginx.access.var.paths=[c:/programdata/nginx/logs/*access.log*]"

You can specify multiple overrides. Each override must start with -M.

If you are running Filebeat as a service, you cannot set paths from the command line. You must set the var.paths option in the module configuration file.
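As an illustration, a modules.d/nginx.yml that sets the access log path used in the deb and rpm -M example might look like this sketch. The error fileset path is an assumption added for symmetry; adjust both globs to wherever your logs actually live.

```yaml
# modules.d/nginx.yml: sketch of per-fileset path overrides.
# Paths are assumptions; replace them with your actual log locations.
- module: nginx
  access:
    enabled: true
    var.paths: ["/var/log/nginx/access.log*"]   # same value as the deb/rpm -M override
  error:
    enabled: true
    var.paths: ["/var/log/nginx/error.log*"]    # assumed error log location
```

The access entry has the same effect as -M "nginx.access.var.paths=[/var/log/nginx/access.log*]", but because it lives in the module config file it also applies when Filebeat runs as a service.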

For information about specific variables that you can set for each fileset, see the documentation for the modules.

See Specify which modules to run for more information about setting variables and advanced options.

Load ingest pipelines manually

The ingest pipelines used to parse log lines are set up automatically the first time you run the module, assuming the Elasticsearch output is enabled. If you’re sending events to Logstash, or plan to use Beats central management, you need to load the ingest pipelines manually. To do this, run the setup command with the --pipelines option specified. If you used the modules command to enable modules in the modules.d directory, also specify the --modules flag. For example, the following command loads the ingest pipelines used by all filesets enabled in the system, nginx, and mysql modules:

deb and rpm:

filebeat setup --pipelines --modules system,nginx,mysql

mac:

./filebeat setup --pipelines --modules system,nginx,mysql

linux:

./filebeat setup --pipelines --modules system,nginx,mysql

win:

PS > .\filebeat.exe setup --pipelines --modules system,nginx,mysql

If you’re loading ingest pipelines manually because you want to send events to Logstash, also see Working with Filebeat modules.
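When events go to Logstash, the output section of filebeat.yml switches from output.elasticsearch to output.logstash, because Filebeat allows only one output to be enabled at a time. The following is a minimal sketch; mylogstashhost is a placeholder and port 5044 is assumed to be the port of your Logstash Beats input. Configuring Logstash to apply the loaded ingest pipelines is outside this sketch.

```yaml
# filebeat.yml: send events to Logstash instead of directly to Elasticsearch.
# Only one output may be enabled, so the Elasticsearch output is commented out.
#output.elasticsearch:
#  hosts: ["myEShost:9200"]

output.logstash:
  hosts: ["mylogstashhost:5044"]   # placeholder host; 5044 assumed to be the Beats input port
```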